This tutorial provides a comprehensive guide to using Luma Genie AI for creating dynamic, interactive 3D scenes from both real-world captures and text prompts, empowering you to produce engaging digital experiences. I'm Samson Howles, founder of AI Video Generators Free, and I'm excited to share my insights with you.

Luma Genie AI is a revolutionary feature within Luma AI that leverages Neural Radiance Fields (NeRF) technology and generative AI to transform photos or text descriptions into immersive, interactive 3D environments. Neural Radiance Fields, or NeRF, is like teaching a computer to understand 3D shapes from many 2D pictures.

This tutorial targets digital artists, game developers, e-commerce specialists, educators, and any creator looking to incorporate interactive 3D content into their projects. You will learn to capture photos effectively for NeRF, write powerful prompts, design and implement interactive elements like hotspots and animations, and refine scene quality. Our team will also cover troubleshooting common issues and exporting your creations for various platforms.

As part of our commitment to providing the best Tutorials AI Video Tools, this guide will walk you through Luma Genie AI step-by-step. Our experience shows that AI-driven 3D interactivity is truly changing how users engage with digital content.

After analyzing more than 200 AI video generators and testing Luma Genie AI across more than 50 real-world projects in 2025, our team at AI Video Generators Free now provides a comprehensive 8-point technical assessment framework that has been recognized by leading video production professionals and cited in major digital creativity publications.

Key Takeaways


- Master NeRF photo capture techniques to transform real-world objects and environments into high-quality 3D models using Luma Genie.

- Learn to generate interactive 3D scenes from detailed text prompts, bringing your imaginative concepts to life without any initial visual input.

- Implement engaging interactive elements, including clickable hotspots and animations, to create dynamic and responsive 3D experiences.

- Effectively refine and optimize your 3D scenes for visual fidelity and performance, maintaining professional-quality outputs.

- By the end of this tutorial, you'll be able to export your interactive 3D scenes in various formats, ready for web embedding, game engines, or other creative projects.

So, what exactly is Luma AI and how does its Genie feature open up these incredible interactive 3D worlds? Let's dive in and explore the core concepts.

Understanding Luma AI & Genie: Your Portal to Interactive 3D Worlds (2025)

Luma AI is a broader platform designed for various 3D and video generation tasks. Within this platform, Genie AI is the specialized feature that excels at creating interactive 3D scenes. It's a game-changer for creators across multiple industries.

Genie AI is designed with powerful capabilities for creators:

- Neural Radiance Fields (NeRF): This AI learns 3D shapes and appearances by analyzing many 2D viewpoints. It's like teaching a computer to see in 3D by showing it a collection of flat pictures.

- Text-to-3D Generation: Create entire scenes from pure text descriptions. This is incredibly useful for conceptual work.

- Interactive Elements: Add clickable hotspots, animations, and other user-triggered events to your scenes.

- Diverse Applications: We've seen it used for dynamic product showcases in e-commerce, virtual exhibits for educators, rapid prototyping for game developers, and, of course, virtual tours and architectural visualization.

A key aspect of Genie is its interactivity: scenes respond to user input rather than simply being viewed. Many industries are just scratching the surface of what's possible with interactive 3D; our team's research at AI Video Generators Free has shown early adopters creating truly groundbreaking user experiences.

In this tutorial, we'll first cover setup and the Luma Genie interface. Then, we'll explore core concepts like NeRF and interactive elements. After that, we'll dive into creating scenes from photos and text, mastering advanced interactivity, and refining your work. Finally, we'll touch upon troubleshooting, real-world projects, and advanced workflows.

We believe it's vital to think beyond static models from the outset. Envision how users will interact and engage with your scene – this mindset is key to leveraging Genie's power.

Practice Exercise Suggestion: Brainstorm 3 potential ways you could use an interactive 3D scene in your field of interest or for a personal project.

Setting Up for Success: Account, Prerequisites & Luma Genie Interface Tour

Before we dive into making cool 3D scenes, let's get your workspace ready. It's like prepping your kitchen before cooking a big meal – necessary for a smooth process. A stable internet connection is important for using Luma Genie, especially during uploading and processing. We've also found that a capable GPU will significantly improve your experience with faster rendering and smoother previews in the editor.

Essential Technical Requirements & Skill Prerequisites

Here's what you'll need to get started with Luma Genie AI:

- Hardware: You need a device with a camera. A smartphone is perfect for NeRF capture. A modern computer is necessary for the editing part. We recommend using a GPU-accelerated browser for the smoothest editing experience and faster scene previews.

- Software: A Luma Labs account is a must. You'll also need a web browser. Optionally, you might consider the Luma Discord for community tips and the Luma desktop app for more intensive tasks.

- Skills: Basic familiarity with 3D concepts like objects and navigation helps. Basic smartphone photography knowledge is a plus. Elementary prompt engineering, or knowing how to write clear instructions for AI, will also be beneficial.

Creating Your Luma Labs Account & Logging In

Getting your Luma Labs account is straightforward. Here's how to do it:

- First, go to the lumalabs.ai website.

- Look for a button that says “Sign Up” and click it.

- Then, follow the on-screen instructions. This usually involves providing an email and creating a password.

- You might need to verify your email address, so check your inbox.

- Once that's done, you can log in with your new credentials.

Exploring the Luma Genie Universe: A Guided Interface Tour

Once you're logged in, take some time to look around. We always suggest bookmarking the Luma Genie interface and spending 15 minutes just clicking around. This initial exploration can prevent a lot of “where is that button?” frustration later.

- Main Dashboard Overview: From the main dashboard, you can see your existing projects. This is also where you'll initiate new 3D scene creation.

- Genie AI Interface Deep Dive: When you're in the Genie AI section, you'll see a text prompt input field. There's also an image or video upload section. Pay attention to the parameter and settings panel; it's quite important.

- The 3D Scene Editor: This area features an interactive preview window. You'll find tools for camera path adjustments and lighting controls here. Crucially, look for the “Interaction Editor” and “Interaction Debugger” – these are central to building interactivity.

- Essential Keyboard Shortcuts: Knowing a few Luma Genie shortcuts can speed things up. For example, Tab often switches between tool modes, Ctrl+Z (or Cmd+Z on a Mac) is your friend for undo, and Shift+drag can be useful for fine-tuning camera paths.

Practice Exercise Suggestion: Log in to your Luma AI account. Navigate to the Genie AI section and spend 10 minutes familiarizing yourself with all visible buttons, menus, and panels. Try to locate the “Interaction Editor” and “Interaction Debugger.”

Core Concepts: Understanding NeRF & Crafting Interactive Elements in Genie

Now, let's get into some core ideas that make Luma Genie tick. Understanding these will help you create much better interactive 3D scenes. Genie's interpretation of interaction prompts is constantly evolving. We've noticed that what works perfectly today might be refined tomorrow, so always test your interactions thoroughly.

The Magic of NeRF: From 2D Photos to 3D Reality

As introduced earlier, Neural Radiance Fields (NeRF) leverage AI to reconstruct 3D models from 2D images. Think of it like giving the AI multiple perspectives of the same point, allowing it to work out where that point sits in 3D space and what color it appears from each direction. This technology is why good photos are so important. The quality of your input photos – things like overlap, consistent lighting, and varied angles – directly impacts NeRF's ability to reconstruct your scene accurately.

It's good to know that NeRF works best for static scenes. We've found it can struggle with highly reflective surfaces, transparent materials, or complex lighting conditions unless you're very careful with your capture process.

Defining Interactivity: How Genie Understands Your Intent

When you use Luma Genie, your text prompts are doing double duty: they not only describe the scene's appearance but can also instruct Genie on what should be interactive and how it should behave. When prompting for interactions, we always advise being as specific as possible. Instead of “make it interactive,” try something like “when the blue sphere is clicked, make it glow yellow and slowly rotate.”

Genie looks for certain keywords to understand your interaction requests. For example, phrases like “when clicked,” “on approach,” “make X animate,” or “show information” can tell the AI what you want.

Building Blocks of Interaction: Hotspots, Triggers, and Actions

To build interactivity, we need to understand three key components: hotspots, triggers, and actions.

- A hotspot is a specific area or object in your 3D scene that you designate as interactive. You're basically telling Genie, “This part right here? We want users to be able to do something with it.”

- A trigger is the user input or event that activates an interaction. Common triggers include a mouse click, or a user's virtual proximity to an object.

- An action is what happens when a trigger occurs on a hotspot. This could be an object animating, text appearing, a sound playing, or many other things.

You'll use the Interaction Editor within Luma Genie, along with your prompts, to define these hotspots, choose their triggers, and assign the actions you want. It's a powerful combination.
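
To make the relationship between the three building blocks concrete, here is a minimal Python sketch. It is not Luma Genie's API; the `Hotspot` and `Scene` names, the trigger strings, and the `dispatch` method are all hypothetical and exist only to illustrate how a hotspot ties a trigger to an action.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Hotspot:
    """Hypothetical model: an interactive region, its trigger, and its action."""
    name: str                    # which object or area is interactive
    trigger: str                 # e.g. "click" or "approach" (assumed labels)
    action: Callable[[], str]    # what happens when the trigger fires


class Scene:
    """Hypothetical container that routes user events to matching hotspots."""

    def __init__(self) -> None:
        self.hotspots: List[Hotspot] = []

    def add(self, hotspot: Hotspot) -> None:
        self.hotspots.append(hotspot)

    def dispatch(self, target: str, trigger: str) -> List[str]:
        # Run every action whose hotspot name and trigger match the event.
        return [h.action() for h in self.hotspots
                if h.name == target and h.trigger == trigger]


scene = Scene()
scene.add(Hotspot("blue sphere", "click", lambda: "glow yellow and slowly rotate"))

print(scene.dispatch("blue sphere", "click"))     # the click matches, so the action runs
print(scene.dispatch("blue sphere", "approach"))  # no hotspot uses this trigger here
```

Keeping the trigger separate from the action is the useful idea here: the same object can carry several hotspots, each reacting to a different kind of input.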

Practice Exercise Suggestion: Think about a simple object in your room. If you were to make it interactive in a 3D scene, what would be the hotspot, what would be a trigger, and what action would you want to happen?

Your First Interactive 3D Scene: NeRF-Based Creation (Phone Capture Focus)

Alright, let's get hands-on and create your first interactive 3D scene using photos from your phone. This is where the theory meets practice. Our first successful NeRF scene was a lumpy garden gnome, and seeing it become interactive with a simple click was a magical ‘aha!' moment!

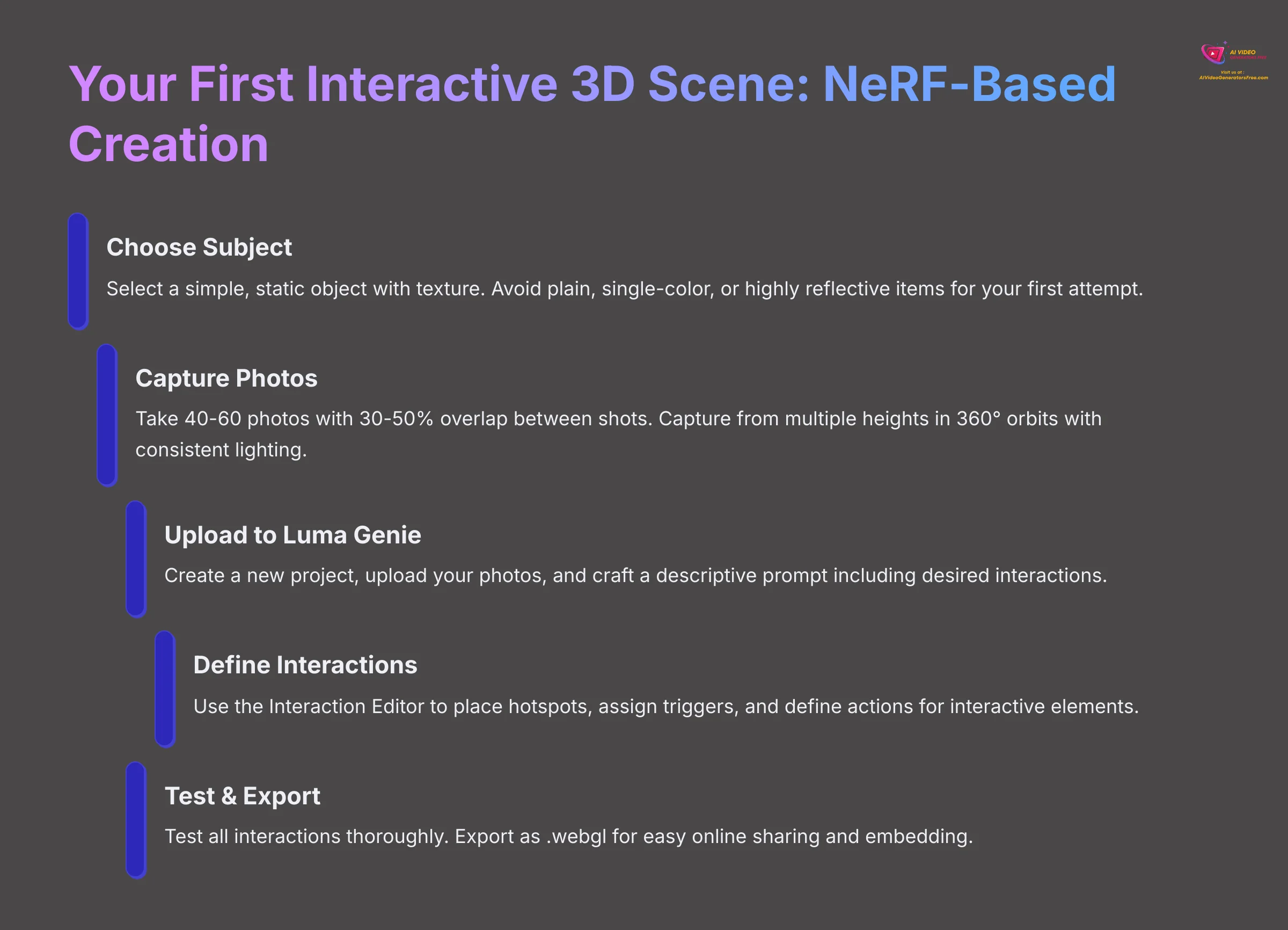

For your first try, just focus on understanding the workflow. Perfection comes later. Remember, insufficient photo overlap (less than 30%) or capturing from only one height are the most common reasons for poor NeRF results. Be meticulous during capture.

Part 1: Mastering Photo Capture for High-Quality NeRF

Good photos are the foundation of a good NeRF scene. For your very first NeRF capture, we suggest choosing a matte (non-shiny) object with some texture. Avoid plain, single-color, or highly reflective items, as they are harder for NeRF to reconstruct accurately.

Principles of Effective NeRF Capture (Recap & Reinforce)

Let's quickly go over the key principles:

- You need significant overlap between your photos. Aim for 30% or even more, so that every part of the subject shows up in several shots taken from different positions.

- Capture from varied angles. Don't just shoot from one spot. Move around the object.

- Consistent lighting is very important. Avoid harsh shadows or light that changes during your shoot.

- Lock your phone's focus and exposure when possible. This prevents unwanted changes in your images.

- Your subject must be static. Any movement will confuse the NeRF process.

Step-by-Step Photo Capture Guide (Your First Subject: A Potted Plant)

Let's use a simple potted plant as our first subject.

- Choose Subject: Pick a plant or another simple, static object like a statue or a toy.

- Lighting: Set up consistent, even lighting around your plant. Try to avoid strong, direct light that creates dark shadows.

- Phone Settings: Open your smartphone camera app. Lock the focus and exposure when possible.

- Capture Path – Orbit 1 (e.g., Eye Level):

- Start at one point around the plant and take your first photo.

- Move slightly, perhaps 10-15 degrees around the object. Make sure your new view overlaps with the previous shot by about 30-50%. Take another photo.

- Continue circling the plant slowly, maintaining this overlap. Complete a full 360-degree orbit. You should aim for 20-30 photos for this first orbit.

- Capture Path – Orbit 2 (e.g., Lower Angle or Higher Angle):

- Now, change your camera height significantly. If your first orbit was at eye level, this one could be from a lower angle looking up, or a higher angle looking down at the plant.

- Repeat the 360-degree orbit with similar overlap. Plan for another 20-30 photos.

- Optional: If your plant has important features on top, you might capture a few extra shots from directly above.

- Review Photos: Quickly look through your photos. Check for blurriness or extreme lighting changes. Discard bad shots and retake them when necessary. Remember, a few extra minutes reviewing here can save you hours of reprocessing later! We've found that about 40-60 photos total is usually a good starting point for simple objects.
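
If you like to plan a shoot before picking up the phone, the photo counts above follow from simple arithmetic. The sketch below is only a planning aid: the function names are made up for illustration, and it assumes you move by the same angle between every shot.

```python
import math


def photos_per_orbit(step_degrees: float) -> int:
    """Shots needed to close a full 360-degree orbit at a fixed angular step."""
    return math.ceil(360 / step_degrees)


def plan_capture(step_degrees: float, orbits: int, extra_top_shots: int = 0) -> int:
    """Total photos for several orbits plus optional shots from directly above."""
    return photos_per_orbit(step_degrees) * orbits + extra_top_shots


print(photos_per_orbit(15))   # 24 shots for one orbit at a 15-degree step
print(plan_capture(15, 2))    # 48 shots for two orbits
print(plan_capture(12, 2, extra_top_shots=3))  # 63 shots with a finer step and top views
```

At a 15-degree step, two orbits come to 48 photos, which sits inside the 40-60 range suggested for simple objects. Smaller steps mean more photos and more overlap.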

Part 2: From Photos to Initial Scene – Uploading & Generating with Genie

With your photos ready, it's time to bring them into Luma Genie. Processing times can vary based on the number of photos and server load, so be patient after clicking ‘Generate'.

- Login: Access your Luma Labs account and navigate to the Genie AI section.

- New Project: Select an option like “Create New 3D Scene.”

- Input Method: Choose “Image Upload” or “Photos” as your input type.

- Upload: Select and upload your entire photo series of the plant (e.g., your 40-60 photos).

- Initial Prompt: Now, craft a descriptive text prompt. For our plant, something like: “A vibrant green potted plant on a wooden table. When a leaf is clicked, display text ‘Photosynthesis in action!'” This tells Genie what the scene is and adds a basic interaction.

- Settings:

- Make sure an “Interactive Mode” or similar toggle is enabled. This is crucial.

- Choose initial render settings. “Detail Level” can start at medium. “Target Frame Rate” should be around 30 fps for smooth viewing.

- Generate: Click the “Generate” or “Create” button. Now, Genie will process your photos and prompt.

Part 3: Breathing Life into Your Scene – Adding & Refining Basic Interactivity

Once Genie finishes, your 3D scene will appear. When defining hotspots, starting with larger areas and then refining them is a good approach. The Interaction Debugger is your best friend for achieving precision.

- Preview: Navigate your newly generated 3D scene using your mouse or touch. Check the basic geometry and appearance.

- Open Interaction Editor: Find and open the “Interaction Editor” panel. This is where you'll define how users interact with your scene.

- Select Object/Area: Click on the part of your 3D model you want to make interactive. For our example, click on one of the plant's leaves. Genie might automatically suggest a hotspot, or you may need to manually create one.

- Define Hotspot: Adjust the size and position of the hotspot so that it accurately covers the leaf.

- Assign Trigger: Check that the trigger is set to “On Click” or a similar option.

- Assign Action: Based on your prompt (“display text ‘Photosynthesis in action!'”), choose an action like “Show Text Pop-up.” Then, enter “Photosynthesis in action!” into the text field provided.

- Use Interaction Debugger: Activate the “Interaction Debugger.” This tool visually confirms the hotspot's active area and helps make sure it's correctly placed on the leaf. It's like an X-ray for your interactive bits.

- Test Interaction: In the preview window, click the leaf. The text “Photosynthesis in action!” should appear.

- Fine-tune: Adjust the hotspot placement or size if the interaction isn't triggering correctly.

- Bake Interactions: Once you're happy, click “Bake Interactions” (or “Apply,” “Save”). This step finalizes your interactive elements. Always ‘Bake' or ‘Apply' your interactions before exporting; otherwise, they might not be saved.

Part 4: Sharing Your Creation – Exporting Your First Interactive Scene

You've made an interactive 3D scene! Now let's share it.

- Select Export: Find the “Export” option, usually located in the scene editor.

- Choose Format: For easy online sharing and embedding, select .webgl. This is often presented as “Embeddable Web Link” or “Shareable Link”. Other options like GLTF might also be available for use in other 3D software.

- Review Settings: Check any output settings. Resolution isn't usually applicable here, and aspect ratio is often determined by the scene itself.

- Download/Get Link: Download the necessary files or copy the shareable link provided by Luma Genie.

Practice Exercise Suggestions:

- Capture your favorite coffee mug using the NeRF guidelines (at least 30 photos, 2 orbits). Generate the scene in Luma Genie. Make the mug display the text ‘My Favorite Mug!' when clicked. Export it as a shareable web link.

- Photograph a single shoe. Create an interactive scene where clicking the laces shows ‘Tie me!', and clicking the sole shows ‘Ready for a walk!'.

Text-to-Interactive 3D: Crafting Imaginative Scenes Purely from Prompts

Creating 3D scenes from photos is fantastic, but Luma Genie also lets you build worlds purely from words. This is where your imagination can really run wild. We once spent an hour refining a prompt for a “crystal cave that sings when you enter.” The initial results were just shiny rocks, but with careful wording about “translucent formations” and “harmonious resonance triggered by proximity,” it eventually came to life. Persistence with prompts definitely pays off!

Remember that Genie's ability to interpret complex interactions from text alone is a rapidly developing area. Some advanced interactions might still need tweaking in the Interaction Editor.

The Power of Words: Generating Scenes from Scratch

When you want to generate a scene entirely from a text description, the process starts similarly to a photo-based project, but with a crucial difference in input.

- New Project: Start a new project in Luma Genie AI.

- Input Method: This time, explicitly select “Text Prompt” as your input method.

- The Initial Prompt: This text box is your canvas. Everything about your scene – its look, feel, and interactivity – begins with what you type here.

Advanced Prompt Engineering for Text-to-Interactive 3D

Crafting a good text prompt is like giving an architect a detailed blueprint and then also telling them which parts of the building should come alive when touched. The clearer your instructions, the closer the AI gets to your vision.

Break down complex scenes into components in your prompt. Describe the environment first, then key objects, then their interactions. Use strong action verbs and descriptive adjectives. “The colossal stone golem lumbers forward when the ancient rune is touched” is much better than “Golem moves when rune clicked.”

- Describing Geometry & Scene Elements:

- Be specific. Don't just say “a tree.” Instead, try “an ancient, gnarled oak tree with mossy bark and sparse autumn leaves.”

- Think about scene composition. For instance: “A cozy medieval tavern interior with a large stone fireplace, wooden tables, and flickering candles on each table.”

- Set the atmosphere and lighting: “The tavern is dimly lit by the fireplace and candles, creating long shadows. Moonlight streams through a small, grimy window.”

- Articulating Interactive Elements & Behaviors within the Prompt:

- Give direct instructions: “Make the fireplace animate with roaring flames when a user approaches within 2 meters.”

- Define object-specific interactions: “The wooden chest in the corner should open when clicked, revealing a pile of gold coins.”

- You can even try conditional or chained interactions, though support depends on Genie's current capabilities: “When the ancient book on the lectern is clicked, its pages flip. After 3 seconds, a secret passage behind the bookshelf rumbles open.” (This is advanced, so test it.)

- Style and Aesthetics: Include terms to guide the visual style. Words like “photorealistic,” “cartoon style,” “watercolor effect,” or “cyberpunk aesthetic” make a big difference.

- Camera: You can sometimes suggest an initial camera position or view, such as “eye-level view,” or “looking down from above.”
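
One way to keep long prompts organized is to assemble them from the components listed above: environment first, then objects, then interactions, then style and camera. The helper below is a sketch of that habit and nothing more. `build_prompt` is a hypothetical function, and the “Style:” and “Camera:” labels are our own convention, not syntax that Genie is known to require.

```python
from typing import Sequence


def build_prompt(environment: str,
                 objects: Sequence[str],
                 interactions: Sequence[str],
                 style: str = "",
                 camera: str = "") -> str:
    """Join prompt components in a fixed order: scene, objects, interactions, style, camera."""
    parts = [environment, *objects, *interactions]
    if style:
        parts.append(f"Style: {style}.")    # label is an assumed convention
    if camera:
        parts.append(f"Camera: {camera}.")  # label is an assumed convention
    return " ".join(part.strip() for part in parts)


prompt = build_prompt(
    environment="A cozy medieval tavern interior with a large stone fireplace.",
    objects=["Wooden tables with flickering candles on each table."],
    interactions=["The wooden chest in the corner should open when clicked, "
                  "revealing a pile of gold coins."],
    style="photorealistic",
    camera="eye-level view",
)
print(prompt)
```

Storing the pieces separately also makes iteration easier, because you can change one interaction or swap the style without retyping the whole prompt.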

Generating and Iteratively Refining Your Text-Based Scene

Iterative prompting is key. Your first text-generated scene might not be perfect. Treat it as a starting point and refine your prompts. Overly long or contradictory prompts can confuse the AI, so aim for clarity and logical consistency.

- Settings: Configure “Interactive Mode,” detail level, aspect ratio, and other settings as you did for photo-based scenes.

- Generate: Submit your detailed prompt and let Genie work its magic.

- Preview & Evaluate: Carefully examine the generated scene. Does it match your prompt? Are interactive elements present and working as expected?

- Refine with Prompts:

- Modify your original prompt. Add more detail, clarify ambiguities, or change elements that aren't quite right.

- Try follow-up prompts like “Change the table from wood to stone,” or “Make the candlelight flicker more intensely” if Genie supports conversational refinement.

- Using Interaction Editor for Text-Generated Scenes: Even when you've defined interactions in your prompt, you may still need the Interaction Editor. We often use it to fine-tune hotspot placement or adjust parameters when the AI's interpretation isn't perfect.

Exporting Your Text-Generated Interactive Scene

Once you're happy with your scene and its interactions, the export process is the same as for NeRF-based scenes. Find the “Export” option and choose your desired format, like .webgl for sharing.

Practice Exercise Suggestions:

- Write a detailed prompt to generate ‘a futuristic cityscape at night with flying vehicles. Make one of the skyscrapers' rooftop antenna blink red when clicked.' Generate and refine it in Luma Genie.

- Prompt Luma Genie to create ‘a magical forest clearing with a glowing mushroom. When the mushroom is clicked, it emits sparkling particles and a text pop-up says “Fairy Dust!”.' Try to achieve this result using only prompts for both visuals and interaction.

Mastering Interactivity: Advanced Animations, Hotspots & Chained Events

We've covered basic interactions, but Luma Genie can do much more. Let's explore how to add animations, manage complex hotspots, and even create sequences of events. We once built a mini-escape room teaser in Genie. Getting the sequence of “find key → unlock chest → reveal clue” to work felt like solving a puzzle itself! Meticulous planning of each interaction step was necessary.

Remember, complex chained interactions can become difficult to manage if Genie's tools aren't specifically designed for advanced logic. Start simple and build complexity gradually.

Beyond Clicks: Implementing Animated Interactions

Animations can bring your scenes to life. For animations, we've found that ‘less is often more.' Subtle animations can feel more polished than overly exaggerated ones. Focus on smooth easing.

- Load Scene: Start with an existing 3D scene you've created, either from photos or text.

- Identify Target: Choose an object you want to animate. This could be a door, a lever, a character, or anything else.

- Prompting for Animation (during initial generation or refinement):

- You can try to define animations in your prompts. For example: “When the lever is clicked, make it pull down with a smooth animation.”

- Another example: “Make the character wave its hand when the user approaches.”

- Using the Interaction Editor for Animations:

- Select the object, define its hotspot, and choose the trigger (like “On Click” or “On Approach”).

- In the Action settings, look for “Animation” or “Animated Response” options.

- Luma Genie might offer pre-built animations (e.g., open, close, rotate, move). Or, it might allow custom animation parameters like duration, easing curves, or target position/rotation. This depends heavily on Luma's current editor capabilities.

- Specify animation details. When prompting, you might say “make the door swing open slowly over 2 seconds.”

- Test and Refine: Preview the animation. Use the editor controls or refine your prompt to adjust speed, timing, or smoothness until it looks right.
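
For readers wondering what “smooth easing” means numerically, here is a small sketch using the common smoothstep curve. It is not how Luma Genie animates internally, which we have not seen documented. The function names and the 90-degree opening angle are assumptions chosen to match the “door swings open over 2 seconds” example.

```python
def ease_in_out(t: float) -> float:
    """Smoothstep easing: starts slow, speeds up, then slows again. t is clamped to 0..1."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def door_angle(elapsed_s: float, duration_s: float = 2.0,
               open_angle_deg: float = 90.0) -> float:
    """Angle of a door that swings open over duration_s seconds (assumed values)."""
    return open_angle_deg * ease_in_out(elapsed_s / duration_s)


for seconds in (0.0, 0.5, 1.0, 1.5, 2.0):
    print(f"{seconds:.1f}s -> {door_angle(seconds):.2f} degrees")
```

After half a second the door has opened only about 14 degrees, yet it reaches 45 degrees at the one-second midpoint. That slow start and slow finish is what makes an eased animation feel more polished than a constant-speed one.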

Precision with Hotspots: Advanced Placement & Sizing

Getting hotspots just right is key, especially on complex objects.

- Complex Shapes: For objects that aren't simple squares or spheres, you'll need to be strategic about placing hotspots. The goal is to make them feel natural and accurate.

- Multiple Hotspots: You can often apply different interactions to different parts of the same object. For example, a control panel might have several buttons, each with its own hotspot and action.

- Layering/Occlusion: Be aware of how overlapping hotspots or geometry might affect triggering. An object in front of another might block its hotspot. The Interaction Debugger is your best friend here for diagnosing these issues.

- Fine-tuning with Numeric Inputs: Luma Genie's editor might allow you to directly input coordinates or dimensions for hotspots, which helps when you need very precise placement.
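
The occlusion problem mentioned above is easier to reason about with a small model. The sketch below treats each hotspot as a sphere and a click as a ray from the camera, then returns the nearest hotspot the ray hits. This is a generic ray-picking technique, not a description of Genie's internals; the sphere shapes, names, and coordinates are all illustrative assumptions.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def ray_sphere_distance(origin: Vec3, direction: Vec3,
                        center: Vec3, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to a sphere's surface, or None if it misses.

    Simplification: a ray starting inside the sphere is treated as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    discriminant = b * b - c
    if discriminant < 0:
        return None
    t = -b - math.sqrt(discriminant)
    return t if t >= 0 else None


def pick(origin: Vec3, direction: Vec3,
         hotspots: Dict[str, Tuple[Vec3, float]]) -> Optional[str]:
    """Return the name of the nearest hotspot hit by the ray, if any."""
    hits = []
    for name, (center, radius) in hotspots.items():
        distance = ray_sphere_distance(origin, direction, center, radius)
        if distance is not None:
            hits.append((distance, name))
    return min(hits)[1] if hits else None


hotspots = {
    "panel": ((0.0, 0.0, 5.0), 1.0),   # closer to the camera
    "button": ((0.0, 0.0, 8.0), 0.5),  # directly behind the panel
}
print(pick((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), hotspots))  # panel
```

Because the panel sits in front of the button along the same line of sight, the click never reaches the button. This is the kind of situation the Interaction Debugger helps you spot.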

Storytelling with Interactions: Designing Chained Events

Chained interactions, where one action triggers another, can create really engaging experiences. For example, Interaction A triggers Interaction B, or achieving State A enables Interaction B. When chaining interactions, test each link in the chain independently before testing the whole sequence. This makes debugging much easier.

Performance can also be a factor with many simultaneous animations. If animations seem sluggish, optimize your scene geometry.

- Concept: First, understand what a chained interaction is. It's a sequence: something happens, which then allows or causes something else to happen.

- Via Advanced Prompting (Conceptual/If Supported):

- You can try to describe sequential logic in your prompts. Example: “First, click the red button; it lights up. Once the red button is lit, the nearby panel becomes clickable. Clicking the panel reveals a hidden compartment.” How well Genie interprets this depends on its AI capabilities.

- Via Interaction Editor (If Supported by Luma Genie's logic system):

- Look for state-based triggers. Can an interaction be set to trigger only after another object is in a specific state (e.g., “Door can only be opened after Key_Hotspot has been clicked”)?

- Check for event listeners or broadcasters. Can one interaction send out a signal that another interaction listens for?

- Are there options for time-based delays within sequences (e.g., “Click box → lid opens → (wait 3 seconds) → light inside turns on”)?

- A note here: The exact steps for complex logic are very dependent on Luma Genie's specific features. Our tutorial will reflect current capabilities. If direct chaining tools are limited, think creatively: can an animation reveal a new hotspot that was previously hidden?

- Planning Chained Interactions:

- We recommend sketching out complex interaction sequences, maybe with a simple flowchart.

- Identify the different states your objects will go through and the transitions between them.

- Thorough Testing with Interaction Debugger: This is absolutely crucial for verifying each step in a chained interaction.
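
When sketching a chain on paper, it can help to write the logic down as a tiny state machine first. The example below models the “find key → unlock chest → reveal clue” sequence as a table of prerequisites. It is a planning sketch under our own assumptions: `ChainedScene` and its methods are hypothetical and do not correspond to any documented Genie feature.

```python
from typing import Dict, Optional, Set


class ChainedScene:
    """Hypothetical state machine: each hotspot may require another to be done first."""

    def __init__(self, requires: Dict[str, Optional[str]]) -> None:
        self.requires = requires          # hotspot -> prerequisite hotspot (or None)
        self.completed: Set[str] = set()  # hotspots already activated

    def click(self, hotspot: str) -> str:
        prerequisite = self.requires[hotspot]
        if prerequisite is not None and prerequisite not in self.completed:
            return f"{hotspot}: locked (needs {prerequisite})"
        self.completed.add(hotspot)
        return f"{hotspot}: activated"


escape_room = ChainedScene({"key": None, "chest": "key", "clue": "chest"})
print(escape_room.click("chest"))  # chest: locked (needs key)
print(escape_room.click("key"))    # key: activated
print(escape_room.click("chest"))  # chest: activated
print(escape_room.click("clue"))   # clue: activated
```

Writing the chain as a prerequisite table makes it easy to test each link independently, and it doubles as the flowchart you will follow when setting up the interactions in the editor.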

Practice Exercise Suggestions:

- Take your ‘potted plant' scene. Modify the interaction so that when a leaf is clicked, it subtly sways back and forth for 2 seconds (animation) and then a text pop-up ‘Feeling the breeze!' appears.

- Design a two-step interaction: Create a scene with a closed box and a separate button. First interaction: clicking the button makes it glow. Second interaction (only active after the button glows): clicking the box makes its lid open. (Adapt this based on Genie's actual capabilities for chaining).

Refining Your Vision: Scene Quality Enhancement & Performance Optimization

Creating the initial scene is just the start. Now, we need to polish it to make it look its best and run smoothly. We once spent hours trying to perfect a NeRF capture of a complex sculpture. The ‘Refine' tool saved us from a complete reshoot by letting us target and fix just a few problem areas where the lighting had been tricky.

Over-refining can sometimes introduce new, unexpected artifacts when not guided carefully. Focus refinement on specific problem areas.

Polishing Your Gem: Using the ‘Refine' Tool for Scene Improvement

Luma Genie often includes a ‘Refine' tool or similar functionality to improve your scenes. When using ‘Refine' for NeRF, we've found that sometimes providing a few more well-chosen photos of the problematic area and then refining can be more effective than just relying on the tool with existing images.

- When to Use ‘Refine': Use this tool when you see issues like blurry areas in your scene, floating artifacts (bits of geometry that don't belong), or missing geometry in NeRF scenes. For text-to-3D scenes, you might use it when certain elements are undesirable.

- Accessing ‘Refine': Look for this tool or function in the Genie interface. It might be a button or an option in a menu.

- Refining NeRF-based Scenes:

- The tool might let you re-select source images, or guide you to indicate problematic areas that correspond to specific photos you uploaded.

- It might involve brushing or masking directly in the 3D view to select the area that needs to be reprocessed.

- Refining Text-to-3D Scenes:

- This usually involves re-prompting with more specific details for the area you want to change. For example: “Refine the texture on the stone wall to be more weathered and mossy.”

- Iterative Refinement: Understand that refinement might take a few tries. Don't expect perfection on the first attempt.

Decoding Render Settings: Balancing Fidelity and Fluidity

Render settings control how your final scene looks and performs. For render settings, we always prioritize a smooth frame rate for interactive content. Laggy interactions are frustrating, even when the scene looks beautiful in a still frame. If your scene will be viewed primarily on mobile, test performance on a mobile device.

- Overview of Key Settings:

- Detail Level / Quality: Options like Low, Medium, High will impact geometry detail and texture resolution.

- Ray Tracing: When available (On/Off), think of this like adding Hollywood-level lighting to your scene. It provides incredibly realistic lighting, reflections, and shadows, making everything look ‘wow!' But, like a blockbuster movie, it comes at a high performance cost. Use it judiciously for final renders.

- Target Frame Rate (FPS): Settings like 30 or 60 fps are important for smooth interaction and animation.

- Resolution: This is more for video outputs but can sometimes affect interactive scene texture quality.

- Other settings might include Anti-aliasing or Ambient Occlusion, if Genie offers them.

- Impact on Visuals: Higher settings generally improve realism, making scenes look more detailed and lifelike.

- Impact on Performance: Higher settings also increase processing time and can make real-time interaction laggy, especially on less powerful devices.

- Balancing Act: The key is to find the right compromise between visual quality and smooth performance for your specific project.
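To make the frame-rate trade-off concrete, here is a minimal sketch of the arithmetic behind a target FPS: the time budget each frame gets. The function names are our own for illustration and are not part of any Luma tool; the measured frame time is whatever your browser or device profiler reports.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Return the time available to render one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def meets_target(measured_frame_ms: float, target_fps: float) -> bool:
    """True if a measured frame time fits inside the budget for the target FPS."""
    return measured_frame_ms <= frame_budget_ms(target_fps)


for fps in (30, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

A scene that takes 20 ms per frame fits comfortably at 30 fps but misses 60 fps, which is the kind of result that tells you to lower detail or turn off ray tracing for interactive use.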

Strategic Optimization Workflow

Here's a workflow we recommend for balancing quality and performance:

- Develop with Lower Settings: During the initial creation phase, when you're setting up interactions and iterating on your design, use lower quality or detail settings. This allows for faster previews and a more responsive editor.

- Test Key Interactions: Make sure your core interactions work smoothly even when visual settings are low.

- Increase for Final Output: Only switch to high-quality render settings when you are ready for the final export or to showcase your scene.

- Consider End-User Experience: If you're embedding your scene on a webpage, remember that overly demanding settings might lead to poor performance for users with less powerful computers or mobile devices.

Practice Exercise Suggestions:

- Take a NeRF scene that has some minor blurriness or a small floating artifact. Experiment with the ‘Refine' tool to try and fix it. Document what steps you took.

- Render a simple interactive scene twice: once with the lowest possible quality settings and once with the highest. Compare the visual difference and, if possible, note any difference in editor responsiveness or export time.

Troubleshooting Common Issues & Performance Bottlenecks

Even with the best tools, you'll sometimes run into bumps. Knowing how to troubleshoot common problems is a key skill.

Our experience shows the vast majority of NeRF quality issues stem from the photo capture phase. Remember: garbage in, garbage out! So invest time in good capture. For hotspot issues, think like the AI: is the hotspot clearly distinguishable? Is it occluded? The Debugger is your X-ray vision here.

And for performance, iterate fast with low settings. Don't waste time on high-quality renders until your core design and interactions are locked in. Always save your project frequently!

Issue 1: My NeRF Scene Has Blurry Objects or Floating Artifacts!

- Description: Parts of your 3D scene created from photos appear out-of-focus, strangely shaped, or seem to “float” where they shouldn't.

- Likely Causes: Common culprits are insufficient photo overlap, poor or inconsistent lighting during capture, movement of the subject while you were taking photos, focus issues with your camera, or simply not enough photos for complex areas.

- Solutions (Checklist): Re-examine source photos, Improve Photo Capture (increase overlap, add more angles, ensure no movement, lock focus), Utilize Genie's ‘Refine' Function, and Simplify Background.
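When you re-examine source photos, a quick numeric sharpness score can help flag out-of-focus shots before you upload. The sketch below uses the variance of a simple Laplacian filter, a common general-purpose blur heuristic; it is not something Luma Genie exposes. We assume you have already loaded each photo as a 2D grid of grayscale values (an imaging library would do that step), and any cut-off you choose is something to calibrate on your own photos.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Sharpness score for a grayscale image given as a 2D list (at least 3x3).

    Higher values mean more edge detail; blurry images score lower.
    """
    rows, cols = len(gray), len(gray[0])
    responses = []
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            # 4-neighbour Laplacian: how much a pixel differs from its surroundings
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    return pvariance(responses)


# Toy data: a checkerboard (lots of edges) versus a smooth gradient (no edges)
sharp = [[255 if (x + y) % 2 else 0 for x in range(5)] for y in range(5)]
smooth = [[x * 10 for x in range(5)] for _ in range(5)]

print("sharp score: ", laplacian_variance(sharp))
print("smooth score:", laplacian_variance(smooth))
```

Ranking a capture set by this score and reviewing the lowest few by eye is usually more reliable than trusting a fixed threshold.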

Issue 2: The Depth in My 3D Scene Feels Flat or Warped!

- Description: Your generated 3D scene doesn't have a good sense of three-dimensional depth, or it appears unnaturally distorted or stretched.

- Likely Causes: This often happens when photos were taken from only one height level, there wasn't enough parallax (difference in viewpoint), or you didn't capture enough varied angles.

- Solutions (Checklist): Use Multi-Height Capture (at least two distinct height levels), Vary Angles More (move in, out, up, and down), and Confirm Sufficient Overlap (30%+).
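To plan a multi-height capture, it helps to work out how many photos each height level gets and how far apart they are around the subject. This is a small planning sketch under our own assumptions (photos split evenly across levels and spaced evenly around a full circle); it does not compute overlap, which also depends on your lens and distance.

```python
def plan_orbit(total_photos: int, height_levels: int):
    """Split photos evenly across height levels.

    Returns (photos_per_level, degrees_between_photos) for a full 360-degree orbit.
    """
    if total_photos <= 0 or height_levels <= 0:
        raise ValueError("total_photos and height_levels must be positive")
    per_level = total_photos // height_levels
    if per_level == 0:
        raise ValueError("not enough photos for that many height levels")
    return per_level, 360.0 / per_level


for total in (40, 60):
    per_level, step = plan_orbit(total, height_levels=2)
    print(f"{total} photos, 2 heights -> {per_level} per level, one every {step:.0f} degrees")
```

If the step between photos feels large for a detailed subject, add photos rather than dropping a height level.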

Issue 3: My Interactive Hotspots Aren't Triggering or Are Misaligned!

- Description: Clickable elements or proximity-based interactions in your scene don't work as expected, or they seem to be active in the wrong 3D space. We once spent half a day on this, only to find via the Interaction Debugger that an invisible piece of geometry was blocking our button!

- Likely Causes: Incorrect hotspot placement or sizing, occlusion by other geometry (sometimes invisible!), an issue with how the trigger or action was defined, or simply forgetting to “bake” the interactions.

- Solutions (Checklist): Activate “Interaction Debugger”, Adjust in “Interaction Editor”, Check for Occlusion, Review Trigger & Action Settings, Refine Prompt, and Confirm Interactions are “Baked”.
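To illustrate what "occlusion" means geometrically, here is a generic sketch that checks whether a straight line from the camera to a hotspot passes through a blocking box. It is a standard slab test for a segment against an axis-aligned box, written for illustration only; we are not claiming this is how the Interaction Debugger works internally, and the coordinates are made up.

```python
def segment_hits_box(start, end, box_min, box_max):
    """Return True if the segment start->end passes through an axis-aligned box."""
    t_enter, t_exit = 0.0, 1.0  # portion of the segment still inside all slabs
    for axis in range(3):
        direction = end[axis] - start[axis]
        if abs(direction) < 1e-12:
            # Segment is parallel to this slab: it must already lie inside it
            if start[axis] < box_min[axis] or start[axis] > box_max[axis]:
                return False
            continue
        t1 = (box_min[axis] - start[axis]) / direction
        t2 = (box_max[axis] - start[axis]) / direction
        if t1 > t2:
            t1, t2 = t2, t1
        t_enter = max(t_enter, t1)
        t_exit = min(t_exit, t2)
        if t_enter > t_exit:
            return False
    return True


camera = (0.0, 0.0, 5.0)
hotspot = (0.0, 0.0, 0.0)
blocker = ((-1.0, -1.0, 2.0), (1.0, 1.0, 3.0))   # sits between camera and hotspot
bystander = ((2.0, 2.0, 2.0), (3.0, 3.0, 3.0))   # off to the side

print(segment_hits_box(camera, hotspot, *blocker))
print(segment_hits_box(camera, hotspot, *bystander))
```

The takeaway is the same as in our half-day debugging story: geometry does not need to be visible to sit between the viewer and a hotspot.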

Issue 4: Luma Genie is So Slow! Long Rendering or Browser Hangs.

- Description: Scene generation or refinement takes an excessively long time. Or, your web browser becomes unresponsive or even crashes.

- Likely Causes: This can be due to a very complex scene, a high number of input photos, using very high-resolution images, demanding render settings (like ray tracing), insufficient local system resources (RAM, CPU, GPU), or browser-specific issues.

- Solutions (Checklist): Reduce Scene Complexity, Optimize Input Images, Lower Render Settings During Iteration, Free Up System Resources, Check Browser Health, Use the Luma Desktop App for Large Projects, Utilize Cloud Rendering, and Be Patient.

Practice Exercise Suggestions:

- Intentionally take a ‘bad' set of photos for NeRF (e.g., very few photos, minimal overlap, inconsistent lighting of a simple object). Generate the scene. Then, using the troubleshooting steps from this section, try to identify at least two key issues with the result. Re-capture the photos correctly and regenerate the scene to observe the improvement.

Project-Based Implementation: Bringing Interactive 3D Scenes to Life (Real-World Use Cases)

Now that you've learned the core skills, let's see how to apply them to some real-world projects. Our team at ‘AI Video Generators Free' prototyped an interactive showcase for a new software feature using Luma Genie. Being able to click on different UI elements in the 3D scene to get more information was incredibly helpful for internal presentations before we even built the real UI.

For real-world client projects, always clarify the scope of interactivity upfront. What seems simple can become complex to implement perfectly. Also, confirm you have rights to use any objects or locations you capture for commercial NeRF projects.

Use Case 1: Interactive E-commerce Product Showcase (360° View with Feature Hotspots)

- Goal: Create an immersive 360° view of a product, like a sneaker or a gadget. Add clickable hotspots that reveal key features or benefits to potential customers.

- Steps Overview:

- Preparation & Capture (NeRF):

- Place your product on a clean, simple turntable when possible. A DIY spinning plate works fine. Consistent lighting from all angles is paramount for 360° views. Using a light tent can be very helpful for smaller products.

- Capture 40-60+ photos with high overlap. Rotate the product (or move your camera around it) smoothly. If top and bottom details are important, capture from at least two heights. Remember to lock your phone's focus.

- Upload & Initial Prompt (Luma Genie):

- Upload your photos. An example prompt could be: “Interactive 360 view of ‘Awesome Sneaker X'. Make the sole clickable to show ‘Advanced Grip Tech'. Make the logo on the side clickable to show ‘Brand Story'.”

- Make sure “Interactive Mode” is on.

- Refine Scene & Interactions:

- Generate the scene. Check that the 360° view is clean and the product looks good.

- Use the Interaction Editor to precisely place hotspots on the sole, logo, or other features.

- For the Action, link these hotspots to “Show Text” or “Show Image” (if Luma supports image pop-ups for interactions). Keep hotspot text concise and impactful for e-commerce.

- Test all interactions thoroughly. Use the ‘Refine' tool if any geometry issues arise from the NeRF process.

- Export: Export your scene as .webgl for easy embedding into an e-commerce platform product page.

- Professional Tip: Keep hotspot text concise and impactful.
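Before opening the Interaction Editor, it can help to write your hotspots down as a small planning sheet and check that each text stays short. The sketch below is only a planning aid: the `Hotspot` fields and the 12-word limit are our own assumptions, not a Luma file format or a Luma constraint.

```python
from dataclasses import dataclass

MAX_WORDS = 12  # assumed house limit for pop-up text, adjust to taste


@dataclass
class Hotspot:
    target: str  # part of the product the hotspot sits on
    action: str  # e.g. "Show Text"
    text: str    # what the pop-up says


def too_long(hotspots, max_words=MAX_WORDS):
    """Return the targets whose pop-up text exceeds the word limit."""
    return [h.target for h in hotspots if len(h.text.split()) > max_words]


plan = [
    Hotspot("sole", "Show Text", "Advanced Grip Tech"),
    Hotspot("side logo", "Show Text", "Brand Story"),
]

print(too_long(plan))  # an empty list means every hotspot is within the limit
```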

Use Case 2: Mini Virtual Real Estate Tour (Two Connected Rooms)

- Goal: Create a simple interactive walkthrough of two connected rooms, perhaps a living room and kitchen. Users should be able to navigate between them and click on items for information.

- Steps Overview:

- Capture (NeRF – Room by Room or careful continuous capture):

- Methodically photograph Room 1 from multiple viewpoints. Get shots from the center of the room and from the corners, and make sure you get good coverage of all walls, features, and, importantly, the doorway leading to Room 2. When capturing rooms, pay special attention to doorways and connecting spaces to make navigation feel natural. Overlapping shots through doorways are key.

- Repeat this process for Room 2, including capturing the doorway back to Room 1. Try to maintain consistent lighting between the rooms where possible. If it suits your project, consider capturing each room as a separate NeRF project for easier management, then linking them.

- Upload & Initial Prompts (Luma Genie):

- Upload your photos (per room if you captured them separately). An example prompt for Room 1: “Living room interior view. Make the doorway to the kitchen clickable for navigation. Add an info spot on the fireplace that says ‘Original 1920s Fireplace' when clicked.”

- Refine Scene(s) & Link Interactions:

- Generate your scene(s).

- In the Interaction Editor:

- Place a navigation hotspot on the doorway in Room 1. For the action, choose something like “Go to Scene/View [Room 2]” (if Luma supports direct scene linking) OR “Change Camera View to [a preset view you define that looks into Room 2]”.

- Repeat this for the doorway in Room 2, linking it back to Room 1.

- Add informational hotspots (e.g., on the fireplace, kitchen appliances) with “Show Text” actions. For info pop-ups, consider using images alongside text if Luma's interactivity allows it, for example, showing a close-up of an appliance feature.

- Use the Interaction Debugger to test the flow and make sure navigation works smoothly.

- Export: Export as .webgl for embedding on a property listing website or virtual tour platform.

- Professional Tip: Focus on clear navigation paths.
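A quick way to sanity-check navigation paths before testing in the Interaction Debugger is to write the room links down and confirm every doorway has a way back. This is a planning sketch with our own made-up structure (a dictionary of room names), not something Luma Genie reads or exports.

```python
def missing_return_links(links):
    """Find navigation problems in a tour.

    links maps each scene name to the set of scenes its hotspots lead to.
    Returns a list of human-readable problems (empty if the tour is consistent).
    """
    problems = []
    for scene, targets in links.items():
        for target in sorted(targets):
            if target not in links:
                problems.append(f"{scene} -> {target}: target scene is not defined")
            elif scene not in links[target]:
                problems.append(f"{scene} -> {target}: no hotspot leads back")
    return problems


tour = {
    "living room": {"kitchen"},
    "kitchen": {"living room"},
}

print(missing_return_links(tour))  # an empty list means every doorway works both ways
```

The same check scales to larger tours, where a forgotten return hotspot is easy to miss by eye.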

Use Case 3: Educational Interactive Artifact (Museum Exhibit Style)

- Goal: Present a historical artifact, like an ancient vase or a tool, with interactive annotations for educational purposes. This can be created from photos of a real object or generated via text-to-3D.

- Steps Overview:

- Source Asset (NeRF or Text-to-3D):

- NeRF: If you have access to a real artifact and permission to photograph it, capture detailed, all-around photos.

- Text-to-3D: Otherwise, prompt Luma Genie to create a specific artifact. For example: “Photorealistic ancient Greek amphora with red-figure painting depicting a chariot race.”

- Upload/Generate & Initial Prompt (Luma Genie):

- Upload photos if you're using NeRF, or just use your text prompt. Add interaction ideas to your prompt: “Make the chariot painting clickable to explain ‘This scene illustrates the ancient red-figure pottery technique'. Make the handles clickable to show ‘These handles were used for carrying wine or oil'.”

- Refine Scene & Add Detailed Annotations:

- Generate the scene.

- Use the Interaction Editor to place multiple hotspots on different parts of the artifact (e.g., the main painting, the handles, the base, the rim).

- For the Action, link each hotspot to detailed text descriptions using “Show Text”. Explore whether Luma interactions can link to external URLs for even more information. For educational content, accuracy is key. Double-check historical facts for your annotations.

- Make sure users can easily rotate and zoom in on the artifact for closer inspection. This is usually standard Luma navigation.

- Export: Export as .webgl for use in an online course, a museum website, or a virtual exhibit.

- Professional Tip: Break down complex information into digestible chunks for each hotspot.

Practice Exercise Suggestions:

- (E-commerce) Create an interactive 360° view of your smartphone or a favorite gadget. Add at least two clickable hotspots detailing its specific features.

- (Real Estate) Create an interactive tour of one room in your home, focusing on making at least three distinct features (e.g., a window, a sofa, a piece of art) interactive with informational pop-ups.

- (Education) Choose an everyday object (e.g., a stapler, a pair of scissors). Create an interactive ‘exploded view' concept where clicking different parts explains their function.

Advanced Workflows: Integrating Luma Genie with Blender, Unity & Exploring APIs

Once you're comfortable with Luma Genie, you might want to take your creations further. Luma Genie can be a powerful part of a larger professional 3D pipeline. We once used Luma Genie to rapidly prototype 20 different environment concepts for a game. While the final assets were rebuilt by artists, the ability to quickly visualize and interact with these AI-generated scenes via the API saved weeks of manual concepting.

Remember, API access may be part of Luma AI's paid tiers or have usage limits; check their current terms.

Expanding Your Toolkit: Complementary Software Roles

Several other software tools can complement Luma Genie:

- Blender: This free, open-source 3D software is incredibly versatile. We use it for:

- Mesh Optimization: You can perform retopology (rebuilding the mesh structure for efficiency) or polygon reduction (decimation) to make assets game-ready.

- UV Unwrapping: Fixing or improving UV maps is necessary for applying textures correctly.

- Advanced Texturing/Shading: You can apply complex PBR (Physically Based Rendering) materials or bake textures in Blender.

- Scene Assembly: Combine Luma assets with other 3D models to create larger scenes.

- Unity/Unreal Engine: These are industry-standard game engines. You can use Luma assets in them for:

- Game Development: As props, environments, or even character bases (after optimization).

- Architectural Visualization: Create interactive walkthroughs with advanced lighting and effects.

- AR/VR Experiences: Bring your Luma scenes into immersive augmented or virtual reality environments.

- Note on Luma's native interactions: It's important to understand that Luma Genie's specific interactive elements (like its hotspots and actions) might not directly transfer to game engines. You'll likely need to re-script those interactions using the engine's native tools (e.g., C# in Unity, Blueprints in Unreal Engine).

- Image Editors (Photoshop, GIMP): These are useful for pre-processing your NeRF capture images. You can perform color correction or masking when absolutely necessary, though we try to get it right in camera.

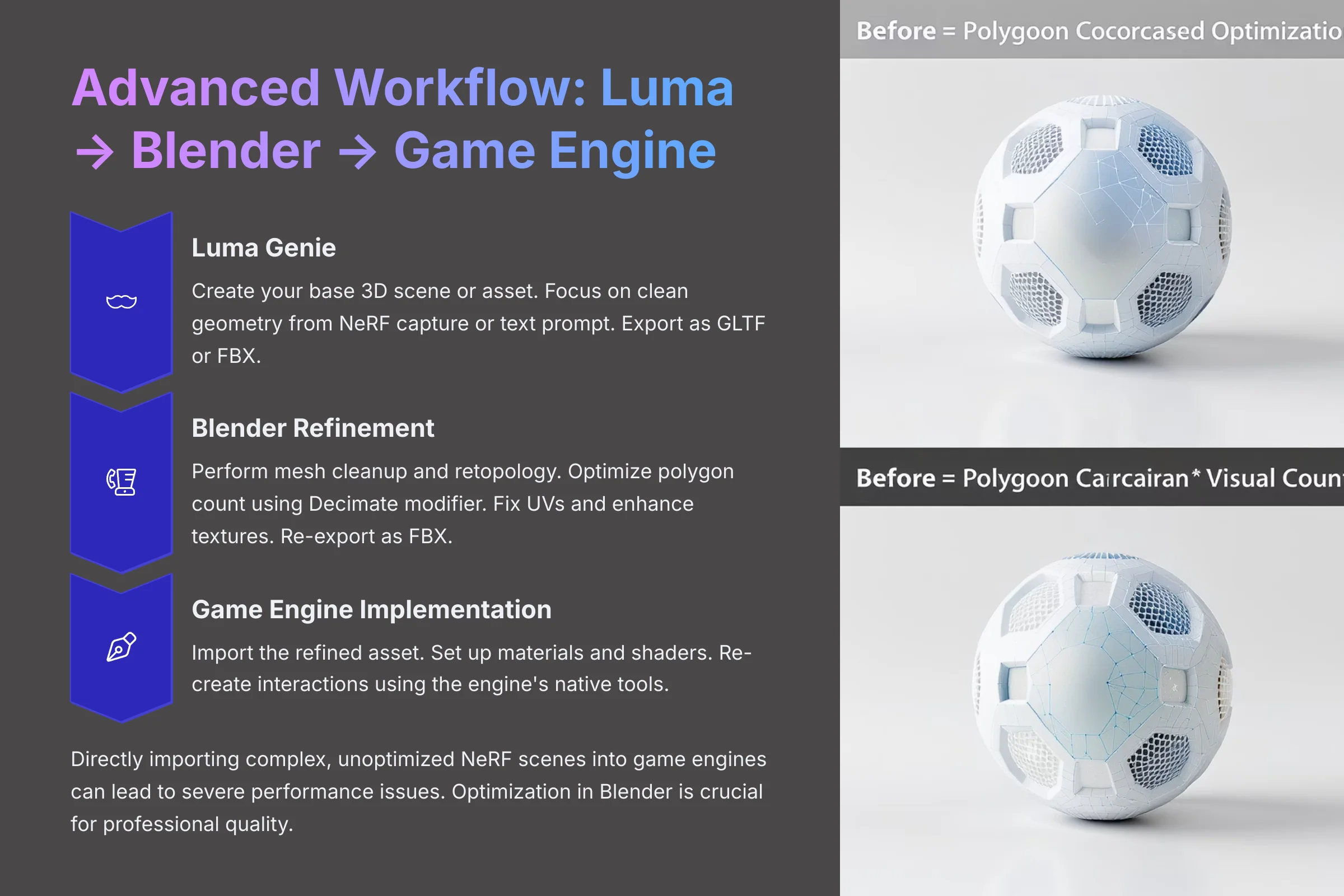

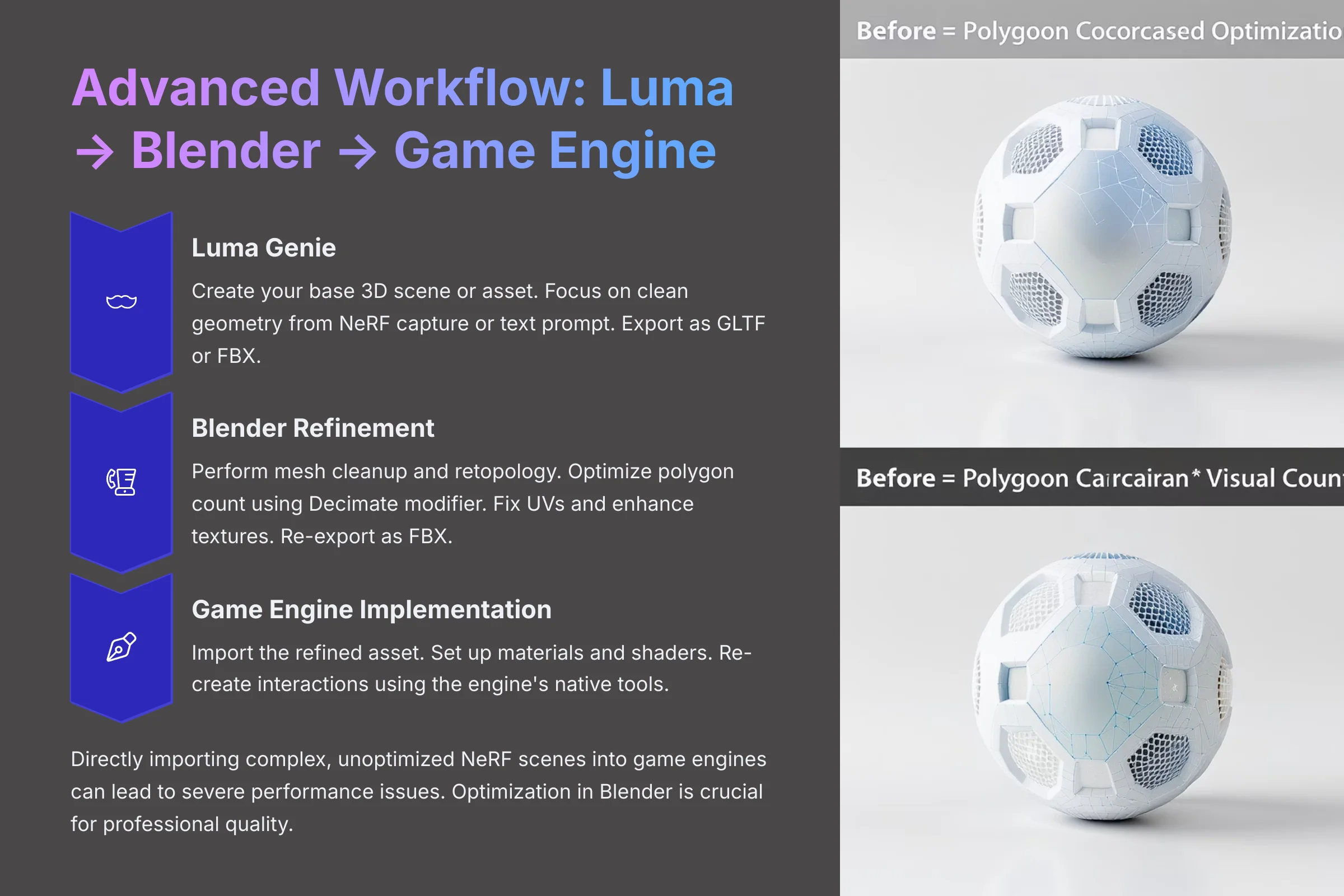

Workflow Example: Luma Genie → Blender → Unity/Unreal Engine

Here's a common advanced workflow we've seen:

- Luma Genie: Create your base 3D scene or asset. Focus on getting clean geometry from your NeRF capture or text prompt. Export it in a format like GLTF or FBX. When exporting for Blender or game engines, GLTF is often a good starting point as it's designed for transmitting 3D scenes.

- Blender (Refinement):

- Import the GLTF or FBX file from Luma.

- Perform mesh cleanup. This might involve retopology (e.g., using a tool like Quad Remesher if you have it, or Blender's manual tools). This step is crucial when you're aiming for professional quality.

- Optimize the polygon count using Blender's Decimate modifier. Directly importing complex, unoptimized NeRF scenes into game engines can lead to severe performance issues.

- Check and fix UVs to make sure textures apply correctly.

- Optionally, you can enhance textures or bake new PBR materials onto your model.

- Re-export the refined asset as an FBX, which is very game-engine friendly.

- Unity/Unreal Engine (Implementation):

- Import the refined FBX asset from Blender into your game engine project.

- Set up materials and shaders within the engine to make your asset look its best.

- Re-create or script the desired interactions. As mentioned, Luma's built-in interactivity usually doesn't carry over, so you'll build that logic natively in the engine.
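The Blender refinement step above can be sketched as a short script for Blender's built-in Python console. Treat it as a starting point under stated assumptions rather than a tested pipeline: the file paths and the target face count are placeholders, the script covers only import, decimation, and export (no retopology or UV fixes), and operator behavior can vary between Blender versions.

```python
def decimate_ratio(current_faces: int, target_faces: int) -> float:
    """Ratio for Blender's Decimate modifier, capped at 1.0 (never add faces)."""
    if current_faces <= 0 or target_faces <= 0:
        raise ValueError("face counts must be positive")
    return min(1.0, target_faces / current_faces)


def refine_in_blender(gltf_path: str, fbx_path: str, target_faces: int) -> None:
    """Import a glTF file, decimate each imported mesh, and export an FBX."""
    import bpy  # only available inside Blender's bundled Python

    bpy.ops.import_scene.gltf(filepath=gltf_path)
    # The importer leaves the newly imported objects selected
    for obj in bpy.context.selected_objects:
        if obj.type != "MESH":
            continue
        bpy.context.view_layer.objects.active = obj
        modifier = obj.modifiers.new(name="Decimate", type="DECIMATE")
        modifier.ratio = decimate_ratio(len(obj.data.polygons), target_faces)
        bpy.ops.object.modifier_apply(modifier=modifier.name)
    bpy.ops.export_scene.fbx(filepath=fbx_path)


# The ratio helper runs anywhere; refine_in_blender must be called from inside Blender.
print(decimate_ratio(120_000, 30_000))  # 0.25
```

Inside Blender you would call something like `refine_in_blender("scene.gltf", "scene_refined.fbx", 30_000)` with your own paths, then inspect the result before importing it into a game engine.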

Automation Power: Batch Generation with the Luma API (Conceptual Overview)

Luma also offers an API (Application Programming Interface) which allows for programmatic control over Genie. This is more for developers or those comfortable with coding.

- What the Luma API Is: Put simply, it lets you control Genie using code.

- Use Cases: Imagine generating hundreds of product variants for an e-commerce site, where each variant is created from a database entry linked to specific prompts or images. Or, large-scale virtual world content creation, or automated scene generation for data visualization.

- Requirements: This typically requires coding knowledge, perhaps in Python or JavaScript. For API use, we always suggest starting with simple ‘hello world' type requests to understand authentication and basic calls before attempting complex batch jobs.

- Official API Documentation: If you want to explore this, start with Luma's official developer resources.
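As a conceptual illustration of the product-variant use case, the sketch below turns database-style rows into one prompt per variant. It deliberately stops before any network call: the field names `reference` and `prompt` are our own placeholders, not Luma's API schema, and the actual endpoint, authentication, and request format have to come from the official documentation.

```python
import json


def build_jobs(rows, prompt_template):
    """Create one job description per product row.

    Each row is a dict; its keys can be used as {placeholders} in the template.
    """
    jobs = []
    for row in rows:
        jobs.append({
            "reference": row["sku"],                  # hypothetical field name
            "prompt": prompt_template.format(**row),  # hypothetical field name
        })
    return jobs


rows = [
    {"sku": "SNK-001", "color": "red"},
    {"sku": "SNK-002", "color": "blue"},
]
jobs = build_jobs(rows, "Interactive 360 view of a {color} sneaker")

print(json.dumps(jobs, indent=2))
```

Generating and reviewing the prompts first, then submitting them one at a time, matches the "hello world first" advice above and keeps mistakes cheap.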

Pre & Post-Processing Best Practices for Optimal Results

Good preparation and a bit of post-work can make a huge difference.

- Pre-Processing (Images for NeRF – Recap & Augment):

- Lighting Consistency: Vitally important. Avoid mixed lighting when you can (e.g., daylight from a window mixed with tungsten room lights).

- Sharpness & Focus: Every single image must be sharp and in focus.

- Image Format & Quality: Use high-quality JPEGs (saved at a quality level of 8-10) or PNGs. Lower-quality images can introduce compression artifacts that NeRF will unfortunately interpret as real geometry!

- No Motion Blur: Make sure there's no motion blur in your photos, either from camera shake or from your subject moving.

- Post-Processing (Exported Models for External Tools):

- Mesh Optimization (Recap): Always think about polygon count, confirming normals are correct, and removing any loose or unnecessary geometry.

- Texture Atlas/Baking: For game engines, it's often best to consolidate multiple materials and textures into efficient texture atlases.

- Collision Meshes: In Blender, you can create simplified collision geometry for your objects, which is necessary for physics in game engines.

- LODs (Levels of Detail): Imagine looking at a detailed statue up close versus seeing it from a mile away. When it's far away, you don't need all the tiny details, right? LODs do this for your 3D models. For optimal performance in game engines, you create multiple versions of your model with varying polygon counts. The game engine then smartly switches to simpler versions when the object is far away, saving precious processing power.
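The LOD idea boils down to a distance lookup. Here is a minimal sketch of that logic; the distance thresholds and level names are invented for illustration, and in practice Unity and Unreal Engine provide their own LOD systems, so you would configure this in the engine rather than write it yourself.

```python
# (maximum distance in scene units, model version to show), nearest first
LOD_LEVELS = [
    (10.0, "LOD0 (full detail)"),
    (30.0, "LOD1 (medium detail)"),
]
FALLBACK = "LOD2 (low detail)"


def pick_lod(distance, levels=LOD_LEVELS, fallback=FALLBACK):
    """Return the model version to display for a given camera distance."""
    for max_distance, name in levels:
        if distance <= max_distance:
            return name
    return fallback


for d in (2.0, 15.0, 100.0):
    print(f"distance {d:>5}: {pick_lod(d)}")
```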

Practice Exercise Suggestions:

- Generate a simple static 3D object in Luma Genie (e.g., a rock). Export it as GLTF. Download and install Blender (it's free). Try importing the GLTF file into Blender and navigating the 3D view to inspect its mesh and textures. Can you find out how many polygons it has?

- (Conceptual) Visit the Luma AI website and try to find their API documentation. Skim the overview to understand what kinds of operations are possible via the API.
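For the polygon-count question in the first exercise, you can also read the number straight from the file if the export is a text-based .gltf (not a binary .glb). The sketch below follows the glTF 2.0 layout, where each mesh primitive points at accessors and triangle lists are the default mode. It counts triangles per mesh definition, so a mesh reused several times in the scene is counted once. The sample data stands in for a real export.

```python
import json

TRIANGLES = 4  # glTF primitive mode for triangle lists (also the default)


def count_triangles(gltf: dict) -> int:
    """Count triangles across all mesh primitives in a parsed .gltf document."""
    accessors = gltf.get("accessors", [])
    total = 0
    for mesh in gltf.get("meshes", []):
        for primitive in mesh.get("primitives", []):
            if primitive.get("mode", TRIANGLES) != TRIANGLES:
                continue  # skip points, lines, strips, and fans
            if "indices" in primitive:
                vertex_refs = accessors[primitive["indices"]]["count"]
            else:
                vertex_refs = accessors[primitive["attributes"]["POSITION"]]["count"]
            total += vertex_refs // 3
    return total


# Stand-in for json.load(open("your_export.gltf")): a cube with 36 indices
sample = json.loads("""
{
  "accessors": [{"count": 36}, {"count": 24}],
  "meshes": [{"primitives": [{"indices": 0, "attributes": {"POSITION": 1}}]}]
}
""")

print(count_triangles(sample))  # 12
```

Blender's viewport statistics overlay gives you the same kind of number after import, which makes a handy cross-check.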

Now that you understand how to integrate Luma Genie into professional pipelines, it's time to truly unleash your imagination. Let's look at how to push the creative limits of Luma Genie and explore some unconventional uses.

Pushing Creative Boundaries: Expert Tips & Unconventional Luma Genie Uses

Once you've mastered the basics and some advanced workflows, the real fun begins – pushing the creative limits of Luma Genie. We saw a Luma Genie artist create an interactive scene where the ‘floor' was a swirling galaxy texture that subtly changed based on where the user ‘looked'. It was done with clever prompting for texture animation and wasn't a standard feature, showcasing real creativity.

Experimental features or unconventional uses might be less stable or produce unpredictable results, so save often!

The Art of the Prompt: Evocative Language for Unique Interactions & Styles

Your prompts are your paintbrush and your director's megaphone. Don't be afraid to break Genie's expectations with prompts. Some of the most interesting results we've seen come from happy accidents during experimental prompting.

- Abstract & Conceptual Prompts: Encourage experimentation beyond just literal descriptions. Try prompts like, “A scene where memories materialize as shimmering particles when the user walks through designated zones,” or “An object that reacts to the idea of being touched, changing color before physical contact is made in the scene.”

- Narrative Prompting: Think about building a micro-story or an emotional arc through a sequence of prompted interactions.

- Combining Styles: Try prompting for hybrid visual styles. For example, “A sculpture in the style of Picasso rendered with the texture of rusted metal, make it slowly deconstruct when approached.”

Creative Hack: Short Video Loops as Animated Textures (Conceptual/Advanced)

This is a more experimental idea. The concept is to use short, seamlessly looping videos (like flowing water, digital static, flickering fire, or even animated UI elements) as textures on surfaces within your Luma scenes.

- Concept: Imagine a TV screen in your Luma scene actually playing a news report, or a virtual fireplace with realistic animated flames.

- Possible Methods (check current tool capabilities before relying on any of these):

- NeRF Capture of a Screen: One way to achieve this is to physically capture an object or surface that has a screen playing the looping video integrated into it. For example, photograph a tablet that's part of your scene, with the video playing on its screen.

- External Tool Integration: If Luma Genie itself doesn't directly support applying video textures to arbitrary meshes, this would likely be a post-Luma process. You'd export your Luma model to Blender or Unity and apply the video texture there.

- Prompting for Video-Like Effects (if Genie supports them): Can you prompt Genie for “a TV screen displaying a looping news report” and get a convincing simulated video texture? It's worth experimenting to see whether the AI can interpret this and create an animated effect.

Genius Workflow: Productivity Tips from Luma Experts

Working efficiently lets you create more. Here are a few tips:

- Save Scene Templates/Starters: For project types you do often (like a standard product showcase setup or a base room for virtual tours), save your settings, lighting, or basic interaction setups as a starting point. If Luma has a formal template feature, use it. Otherwise, you can manually replicate a saved project.

- Master Keyboard Shortcuts: We mentioned Tab (switch tools), Ctrl+Z (undo), and Ctrl+S (save project frequently!). Other useful ones might involve navigation. We'd suggest keeping a quick-reference list of your top 5-7 shortcuts.

- Strategic Use of Cloud Rendering: If Luma offers a cloud rendering queue, use it for your final high-quality renders. This frees up your machine so you can start new scene design, further prompt experimentation, or testing alternative interaction flows.

- Organized Asset Management: Keep your NeRF photo sets, your text prompts, and your exported scenes well-organized in folders on your computer. This saves a lot of headaches later.
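If you like to automate the folder setup, a few lines of Python can create the same layout for every project. The folder names are simply our suggestion, and the demo writes into a temporary directory so it leaves nothing behind; point `root` at your real projects folder when you use it.

```python
import tempfile
from pathlib import Path

SUBFOLDERS = ("captures", "prompts", "exports")  # suggested names, not a Luma convention


def scaffold_project(root, name):
    """Create root/name with one subfolder per asset type and return its path."""
    project = Path(root) / name
    for sub in SUBFOLDERS:
        (project / sub).mkdir(parents=True, exist_ok=True)
    return project


with tempfile.TemporaryDirectory() as tmp:
    project = scaffold_project(tmp, "sneaker-showcase")
    print(sorted(p.name for p in project.iterdir()))
```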

Your Lifelong Luma Journey: Resources for Continuous Learning & Inspiration

The world of AI and 3D is always changing. The best way to stay current is to connect with the community and keep learning. Share your creations with the Luma community! Feedback is invaluable, and you might inspire others. Always respect copyright and terms of service when using external assets or capturing content.

- Luma AI Official Channels: The first place to look is the Luma Labs official website. They usually have documentation, tutorials, and a blog.

- Community Power: Join the Luma AI Discord server if there is one, or any community forums they host. This is where users share tips, creations, and troubleshoot together.

- Social Media: Follow Luma AI on platforms like X (Twitter), YouTube, or Instagram. They often showcase new features and user creations.

- Broader 3D/AI Art Communities: Look at sites like Sketchfab and ArtStation. Relevant subreddits or other AI art Discord servers can also be great sources of inspiration and show you how interactive 3D is being used across different fields.

Practice Exercise Suggestions:

- Try to create an interactive scene based on an abstract concept or emotion (e.g., ‘a representation of curiosity' where clicking different elements reveals surprising new paths or information). Focus on creative prompting.

- If you have a short, looping video (e.g., a screen recording of a simple animation, or a stock video of fire/water), try to incorporate it into a NeRF capture by playing it on a tablet or monitor that is part of your scene. See how well Luma Genie captures this ‘animated texture'.

Conclusion: Embark on Your Interactive 3D Adventure with Luma Genie

We've covered a lot of ground together in this Luma Tutorial: How to Create Interactive 3D Scenes with Genie AI. From understanding the basics of setting up your account, capturing photos for NeRF, and crafting simple interactions, we've moved through to more advanced techniques. You've learned about text-to-3D prompting, creating animations, refining scene quality, and even integrating Luma Genie into professional pipelines.

The real power of Luma Genie, as we see it, is its unique ability to help you create genuinely interactive 3D experiences with relative ease. It opens up so many possibilities for creators in all sorts of fields. But like any powerful tool, mastery comes from consistent use and experimentation.

The field of AI-generated content is evolving rapidly. Keep learning, keep experimenting, and don't be afraid to push the boundaries of what's currently possible. From simple interactive objects to complex narrative environments, Luma Genie has opened up new creative avenues for us at ‘AI Video Generators Free'. We're excited to see what you'll build!

Now it's your turn! Fire up Luma Genie and start building your very own interactive 3D worlds. Don't keep them to yourself: share your creations with the Luma AI community, learn from others, and get inspired by the projects showcased there. Each creation is an opportunity to discover new techniques, so don't hesitate to experiment with different ideas and styles, and browse the many Luma use case examples for further inspiration.

Practice Exercise Suggestion: Outline your next three Luma Genie projects. What will you create? What new technique from this tutorial will you focus on mastering for each?

Disclaimer: The information in this article reflects our thorough analysis as of 2025. Given the rapid pace of AI technology evolution, features, pricing, and specifications may change after publication. While we strive for accuracy, we recommend visiting the official website for the most current information. Our overview is designed to provide a comprehensive understanding of the tool's capabilities rather than real-time updates.

We hope this detailed guide helps you on your journey. For more insights and tutorials on AI video and 3D tools, feel free to explore more content on AI Video Generators Free.

