Key Takeaways

- AI Video Generation Basics: Transform text descriptions into dynamic video content using sophisticated machine learning models trained on massive datasets

- Leading Platforms: Runway Gen-3, OpenAI's Sora, and Pika Labs dominate the market with unique capabilities and specializations

- Current Limitations: Video length restrictions (4-60 seconds), character consistency challenges, and text rendering difficulties

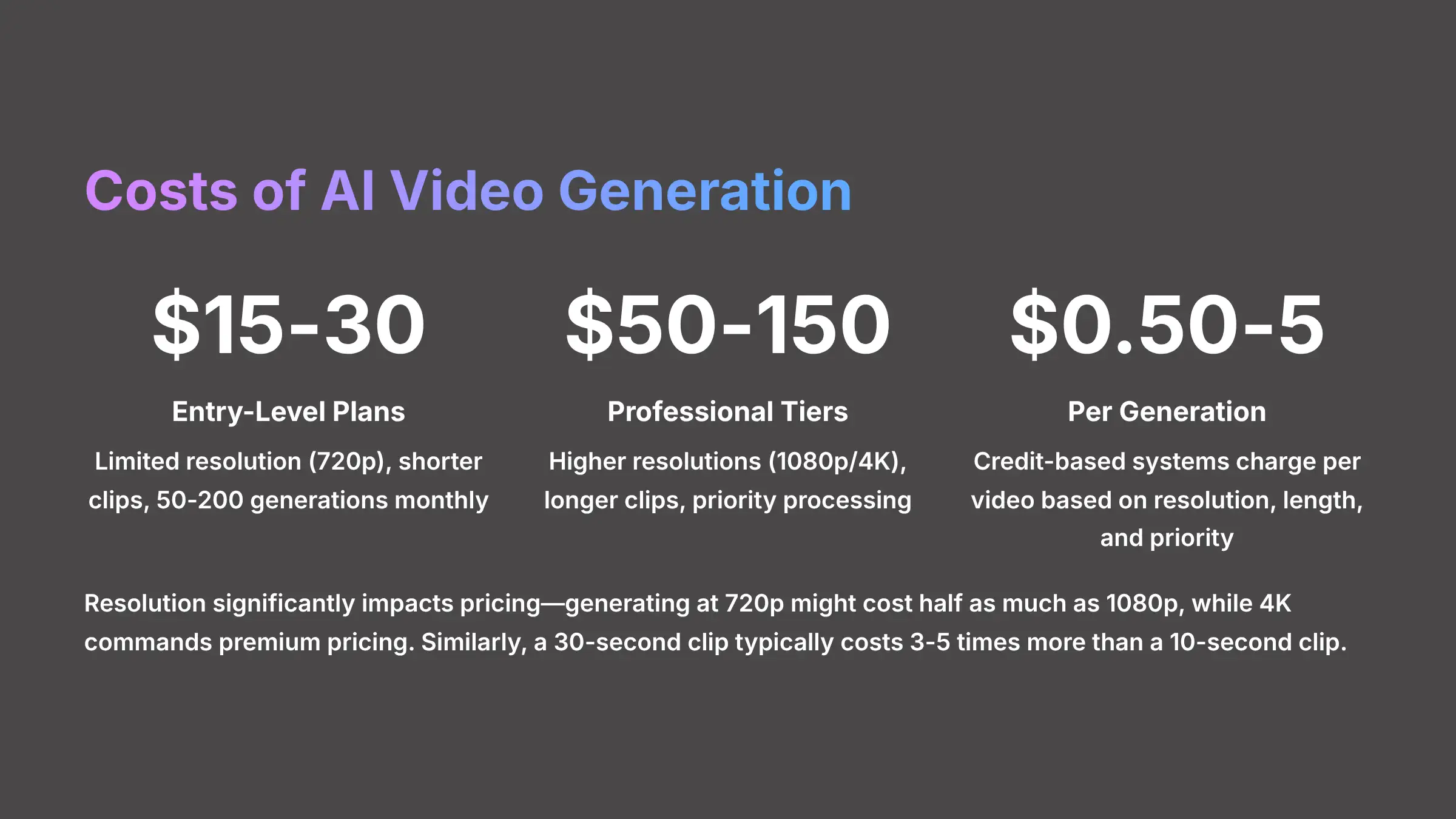

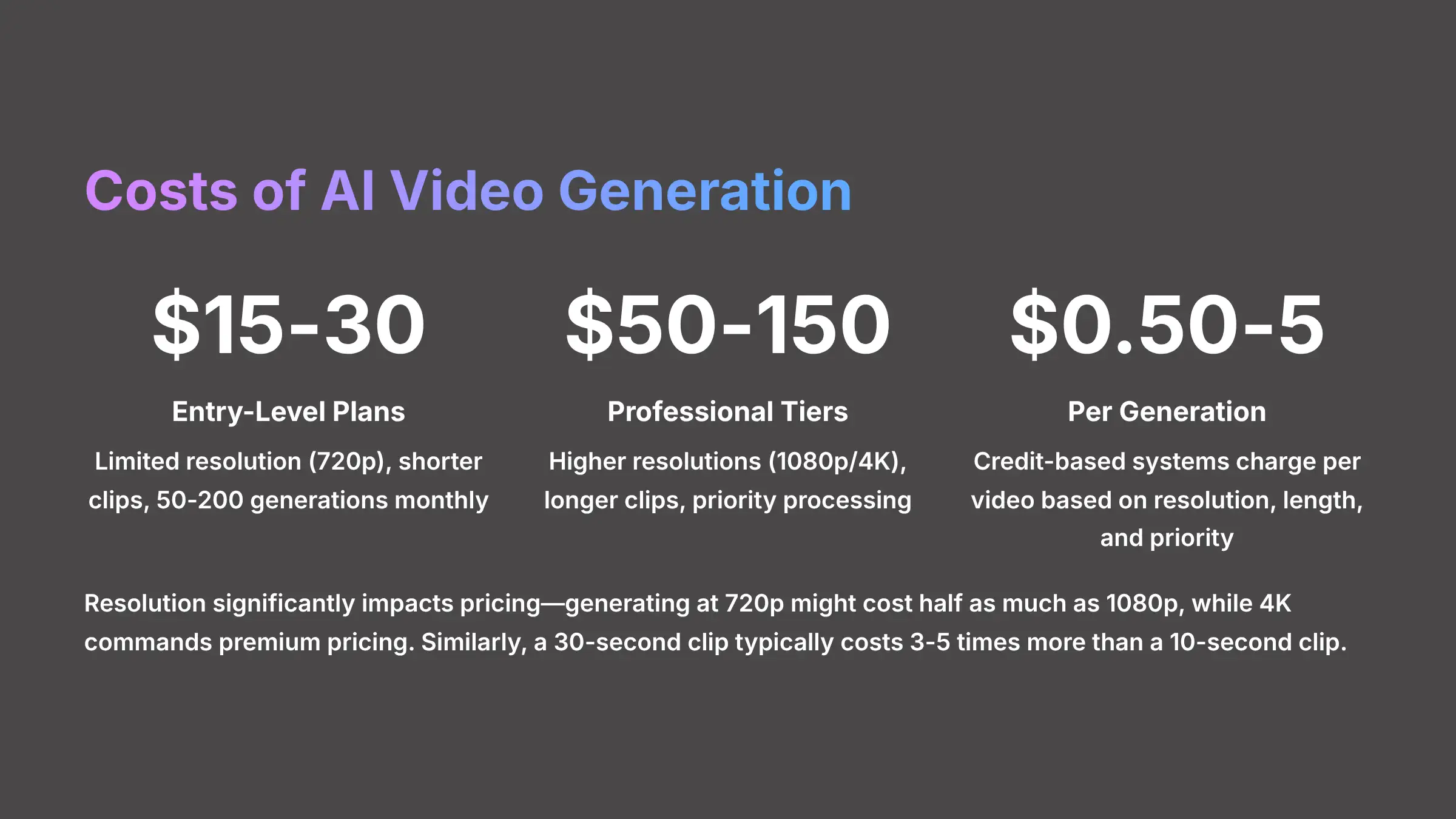

- Cost Structure: Subscription-based models ranging from $15-150 monthly with additional costs for premium features and higher resolutions

- Future Evolution: Extended generation lengths, improved character consistency, and interactive editing capabilities coming in 2025

Welcome, my friend, to MagicLight FAQs: Common Questions and Answers, our guide to the world of AI video generation! I'm Samson Howles, and I've spent years working with AI video tools here at AI Video Generators Free. We're going to explore the questions creators ask most often about making videos with artificial intelligence.

This guide will walk you through how AI video works, what powerful platforms are out there, and how you can actually start creating your own stunning content. We'll dive into the real capabilities and even talk about the costs involved, helping you make smart decisions for your digital content creation journey. It's truly fascinating, what we can do now.

You'll find practical insights to help you navigate AI video generation with confidence, and you should walk away with a clear understanding of the basics. For comprehensive insights into platform capabilities, explore our detailed MagicLight Overview that covers technical specifications and performance analysis.

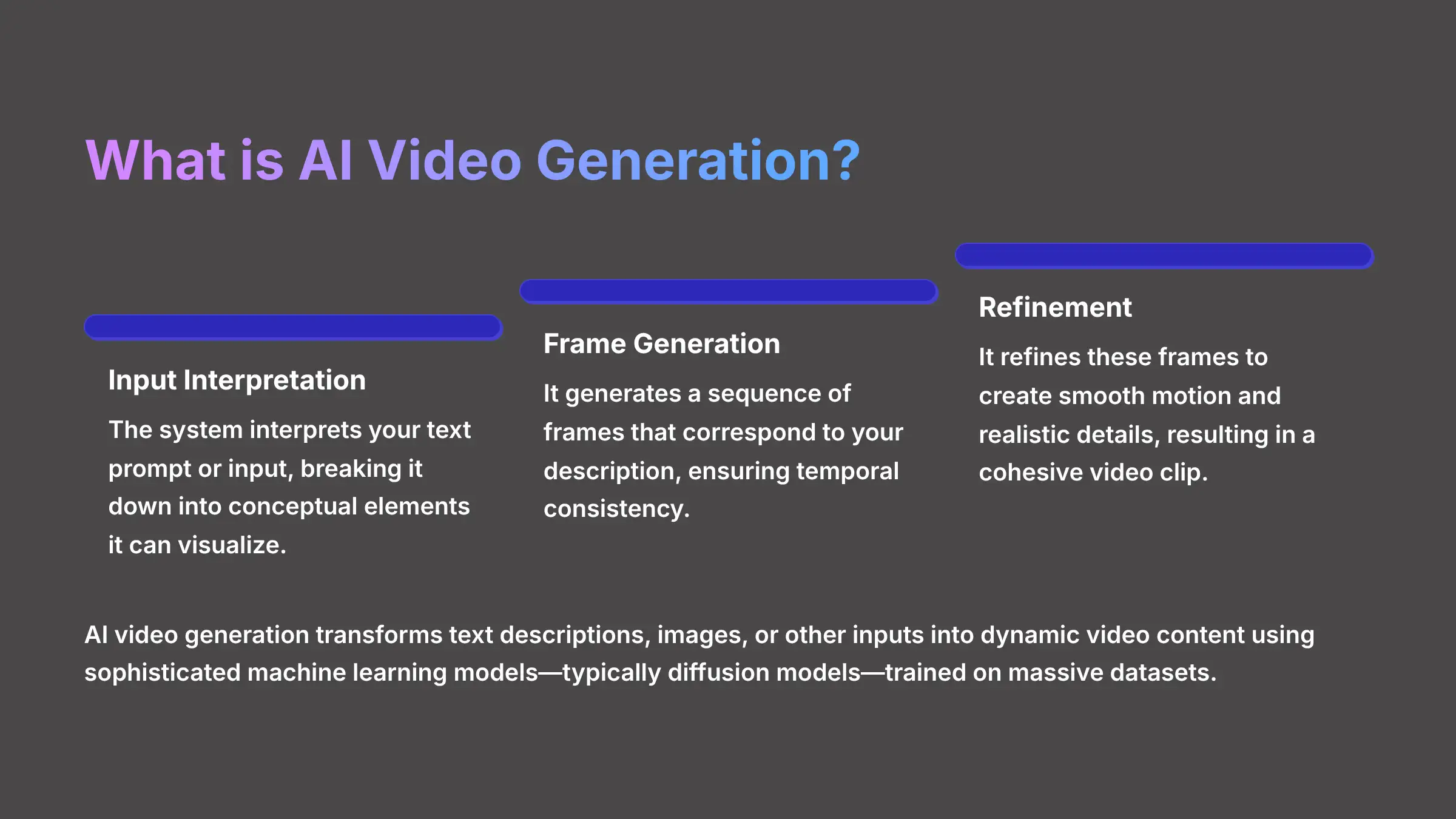

MagicLight FAQs: What is AI video generation and how does it work?

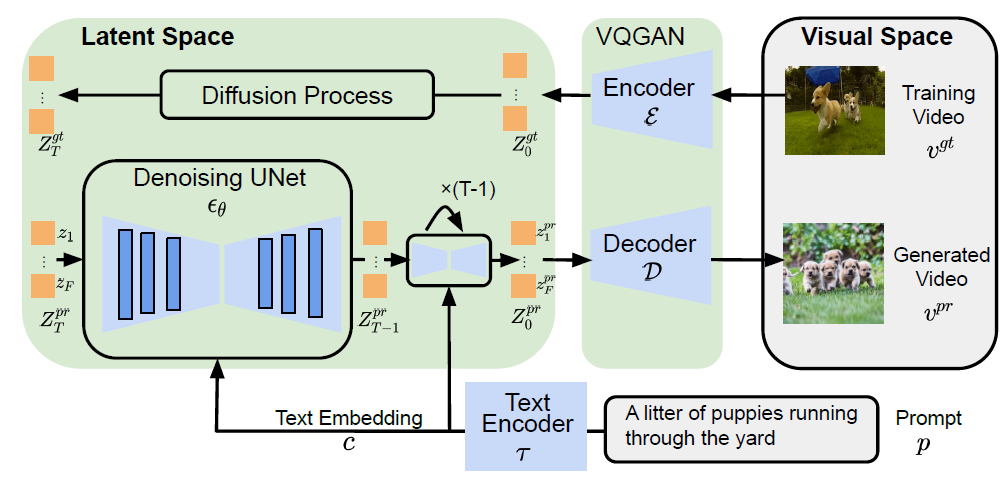

AI video generation is a cutting-edge technology that transforms text descriptions, images, or other inputs into dynamic video content using artificial intelligence algorithms. These systems employ sophisticated machine learning models—typically diffusion models—that have been trained on massive datasets of video and image content to understand the relationship between text descriptions and visual outputs.

The process typically works in three key stages. First, the system interprets your text prompt or input, breaking it down into conceptual elements it can visualize. Second, it generates a sequence of frames that correspond to your description, ensuring temporal consistency between frames. Finally, it refines these frames to create smooth motion and realistic details, resulting in a cohesive video clip.

Modern AI video generators use a combination of technologies including transformer architectures (similar to those in large language models), diffusion models (which gradually denoise random patterns into coherent images), and motion prediction algorithms. What makes these tools particularly powerful is their ability to understand complex concepts, visual styles, camera movements, and even physical interactions between objects—all from simple text descriptions.
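To make the "gradually denoise" idea concrete, here is a toy sketch in Python. It is not how any of the platforms in this guide is implemented: the `toy_denoise` function and its stand-in denoiser are assumptions invented for illustration. A real diffusion model uses a trained neural network to estimate the clean frame from the noisy one and the prompt. This sketch simply hands it the answer so you can watch the noise shrink step by step.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Start from pure noise and step toward `target`.

    The "denoiser" is a stand-in that already knows the clean values
    (hypothetical; a real model would have to predict them).
    Returns the final frame and the mean absolute error after each step.
    """
    rng = random.Random(seed)
    frame = [rng.gauss(0.0, 1.0) for _ in target]  # pure random noise
    errors = []
    for step in range(steps):
        remaining = steps - step
        # Close 1/remaining of the gap to the clean estimate on each step,
        # so the last step (remaining == 1) lands on the target.
        frame = [x + (t - x) / remaining for x, t in zip(frame, target)]
        errors.append(sum(abs(t - x) for x, t in zip(frame, target)) / len(target))
    return frame, errors


if __name__ == "__main__":
    clean = [0.0, 0.25, 0.5, 0.75, 1.0]  # a tiny one-row "image"
    frame, errors = toy_denoise(clean)
    print("error after first step:", round(errors[0], 4))
    print("error after last step: ", round(errors[-1], 4))
```

The point to take away is the shape of the loop: many small corrections applied to noise, rather than one big jump from prompt to picture.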

The most advanced systems can keep a character's appearance reasonably consistent within a short clip, understand cinematic techniques like panning or zooming, and generate visual styles ranging from photorealistic footage to stylized animation. While early AI video tools could only generate a few frames, today's leading platforms can create clips ranging from 4 to 60 seconds with impressive visual quality and coherence.

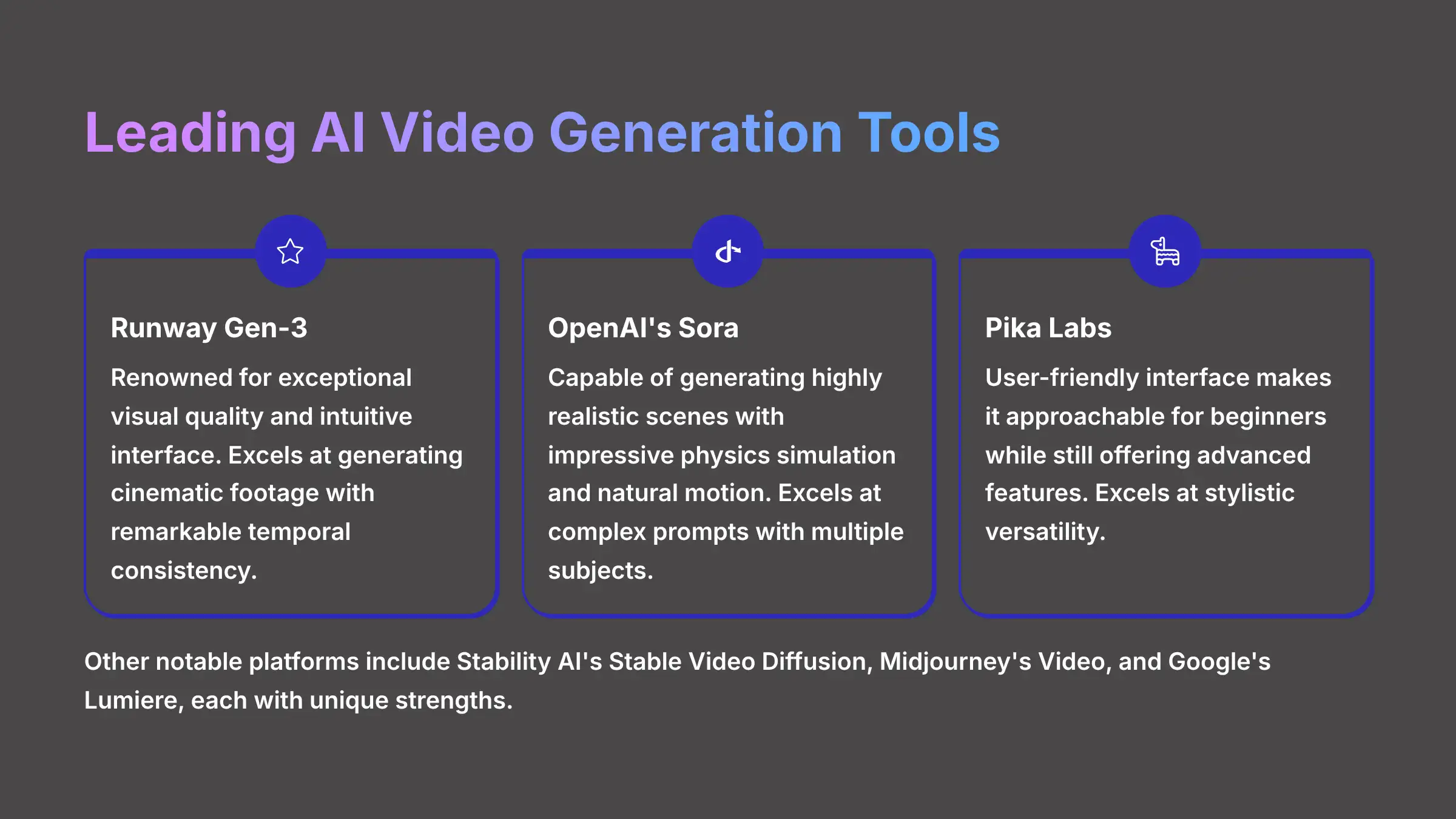

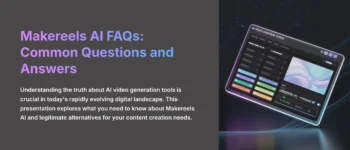

MagicLight FAQs: What are the leading AI video generation tools available today?

The AI video generation landscape has evolved rapidly, with several powerful tools dominating the market in 2025. Each offers unique capabilities and specializations worth understanding before making a choice for your projects.

Runway Gen-3

Industry leader for cinematic quality

- Exceptional visual quality and temporal consistency

- Intuitive interface for professional workflows

- Specialized modes for different creative needs

- Integration with professional video editing tools

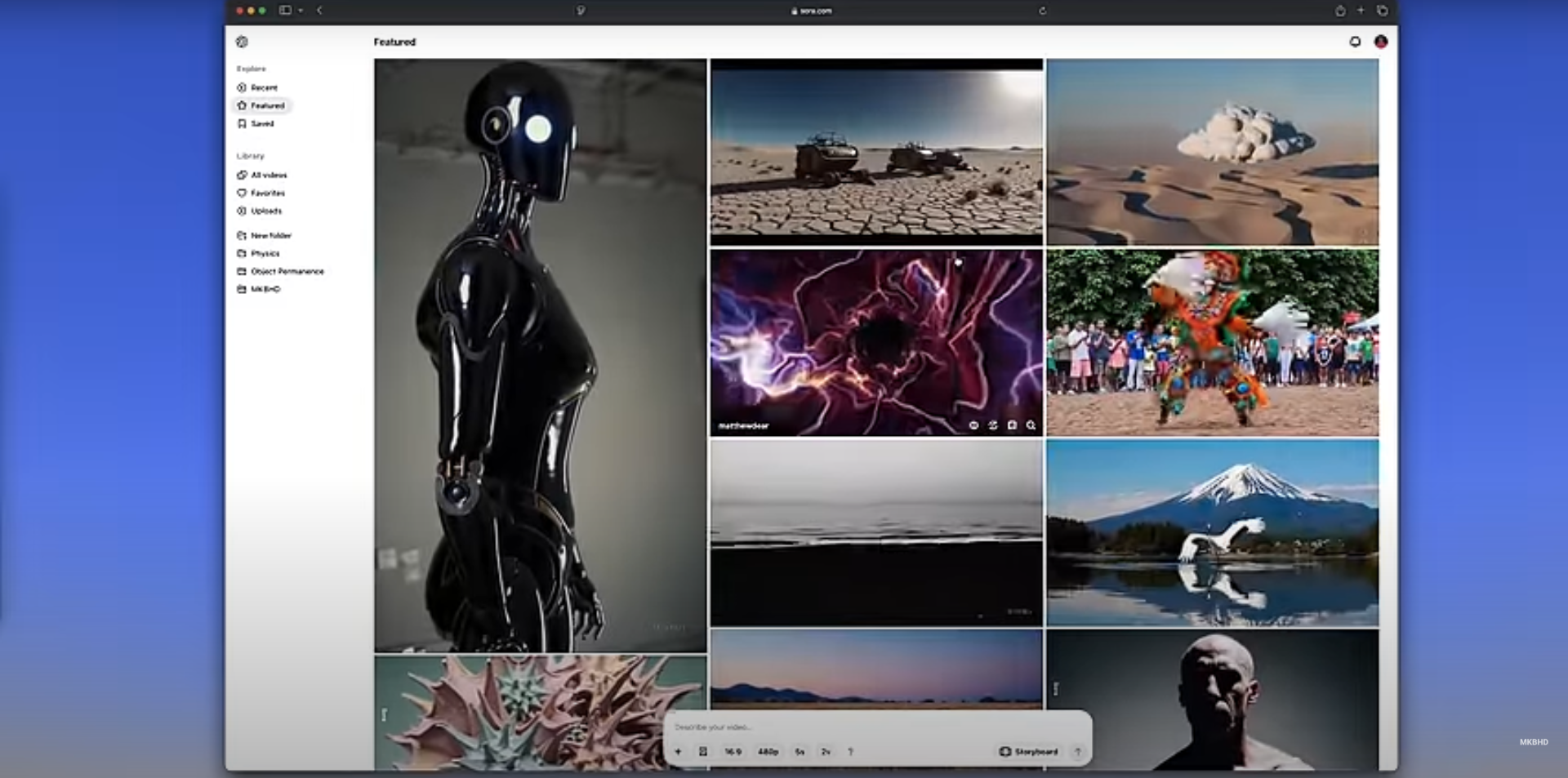

OpenAI's Sora

Breakthrough platform with physics simulation

- Highly realistic scene generation

- Impressive physics simulation capabilities

- Complex prompt understanding

- Natural motion and spatial relationships

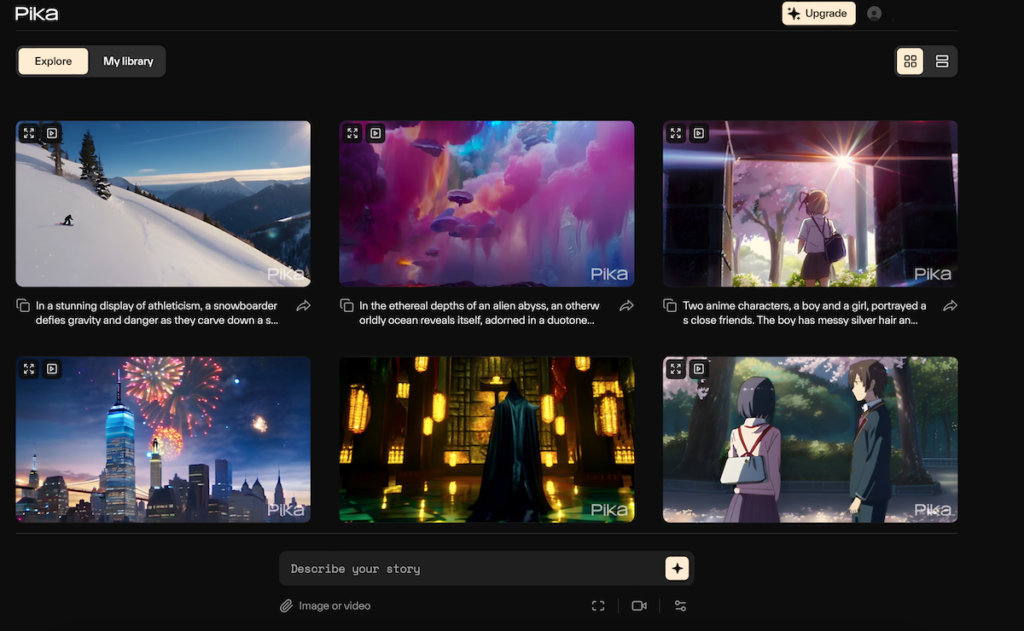

Pika Labs

Perfect balance of accessibility and quality

- User-friendly interface for beginners

- Advanced features for experienced creators

- Versatile stylistic options

- Smooth transition between styles

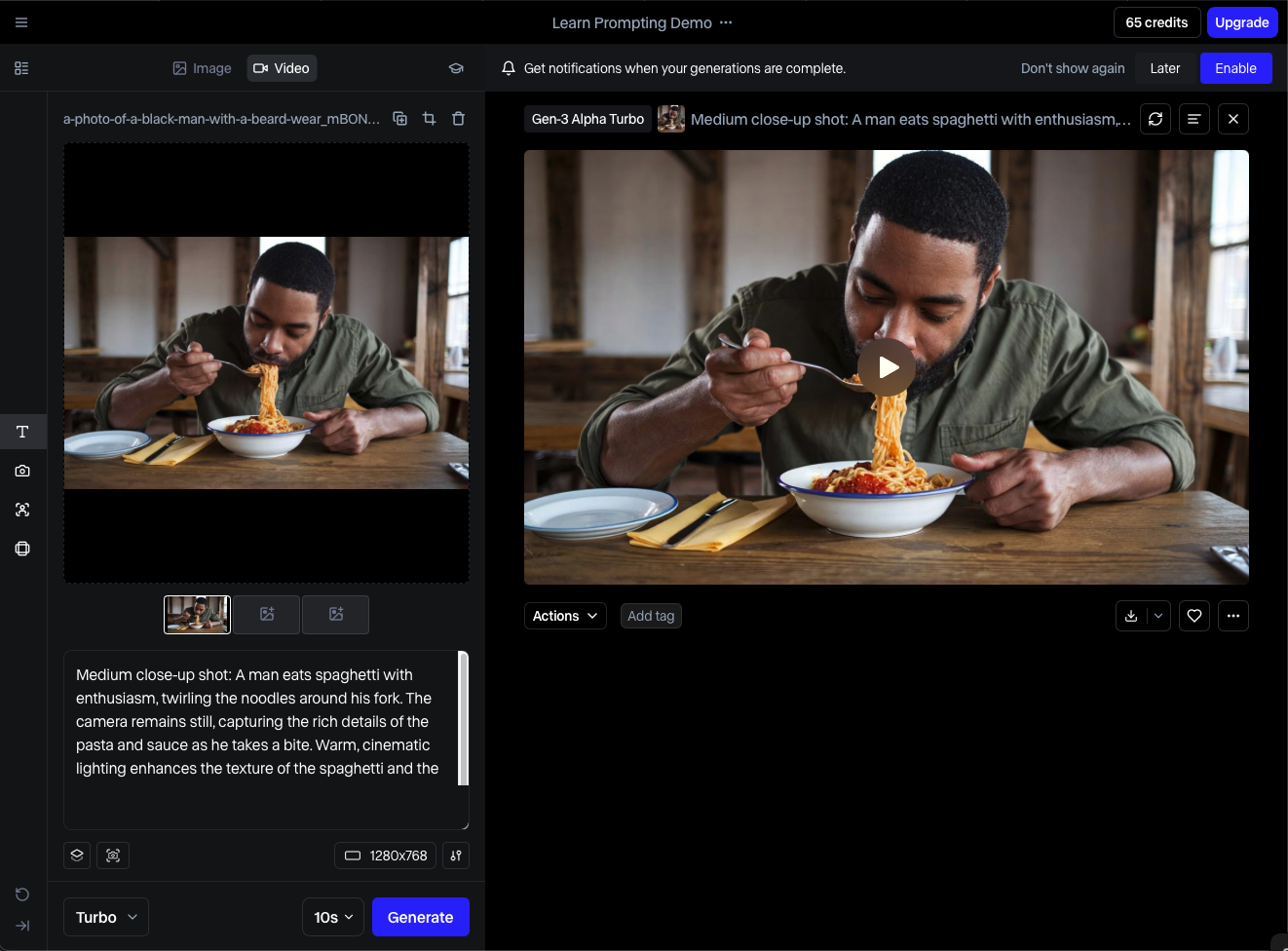

Runway Gen-3 stands as one of the industry leaders, renowned for its exceptional visual quality and intuitive interface. It excels at generating cinematic footage with remarkable temporal consistency and offers specialized modes for different creative needs. Creators particularly value its ability to maintain visual coherence throughout clips and its integration with professional video editing workflows.

OpenAI's Sora represents another breakthrough platform, capable of generating highly realistic scenes with impressive physics simulation and natural motion. Its strength lies in understanding complex prompts and producing videos that follow intricate instructions with multiple subjects and actions. Sora's ability to comprehend spatial relationships and generate convincing human movements has made it particularly valuable for storytelling applications.

Pika Labs has carved out a significant niche with its balance of accessibility and quality. Its user-friendly interface makes it approachable for beginners while still offering advanced features for experienced creators. Pika excels at stylistic versatility, allowing users to easily switch between photorealistic, animated, and artistic rendering styles.

Other notable platforms include Stability AI's Stable Video Diffusion, which specializes in transforming still images into motion, and Midjourney's video model, which applies the company's distinctive aesthetic to moving content. Google's Lumiere, a research model, has also drawn attention for its text-to-video coherence and motion handling.

Each platform offers different generation lengths (typically 4-60 seconds), resolution options, and pricing models, making the choice highly dependent on your specific creative needs and budget constraints. For detailed comparisons of these platforms, check out our comprehensive Best MagicLight Alternatives analysis.

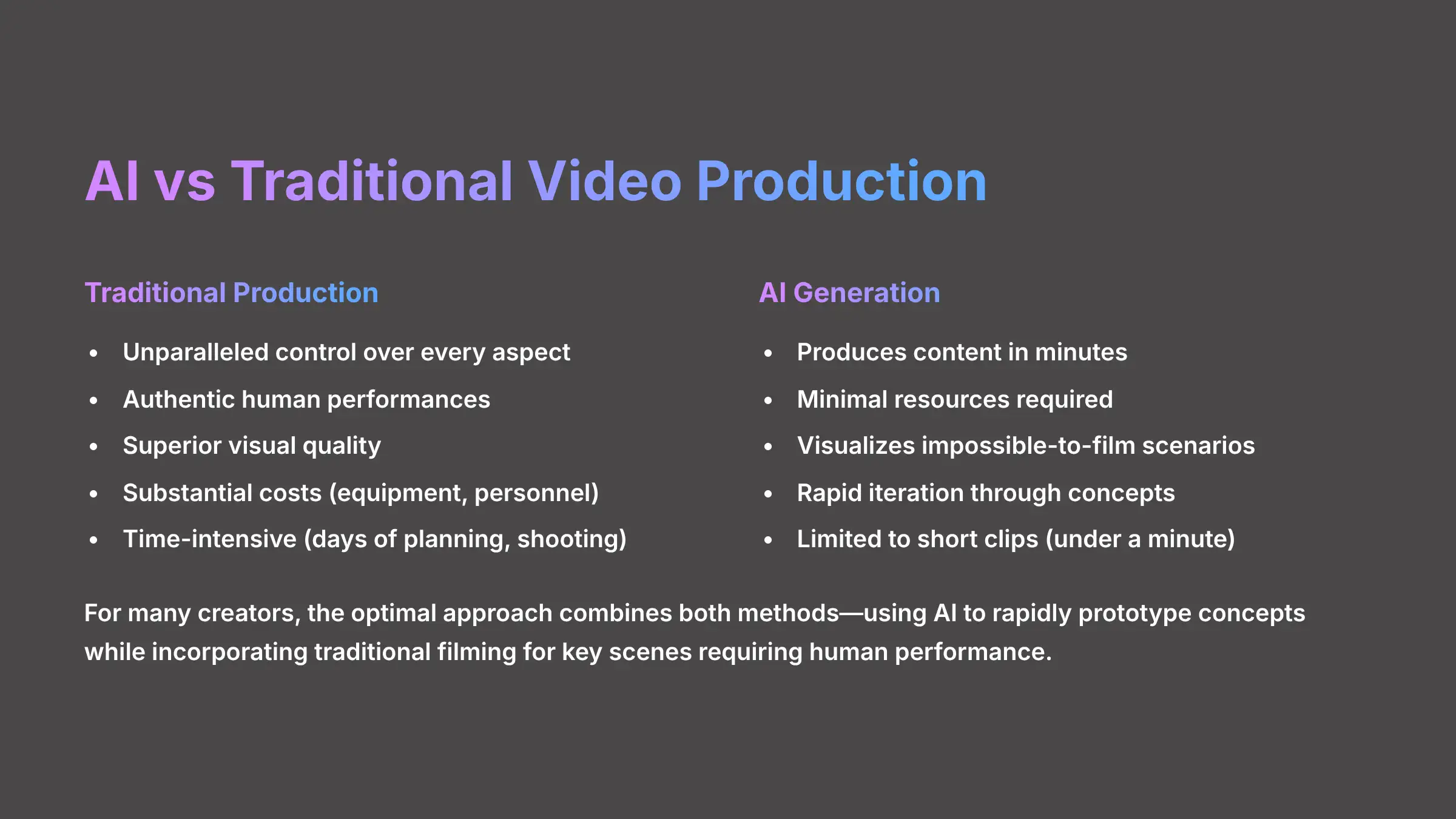

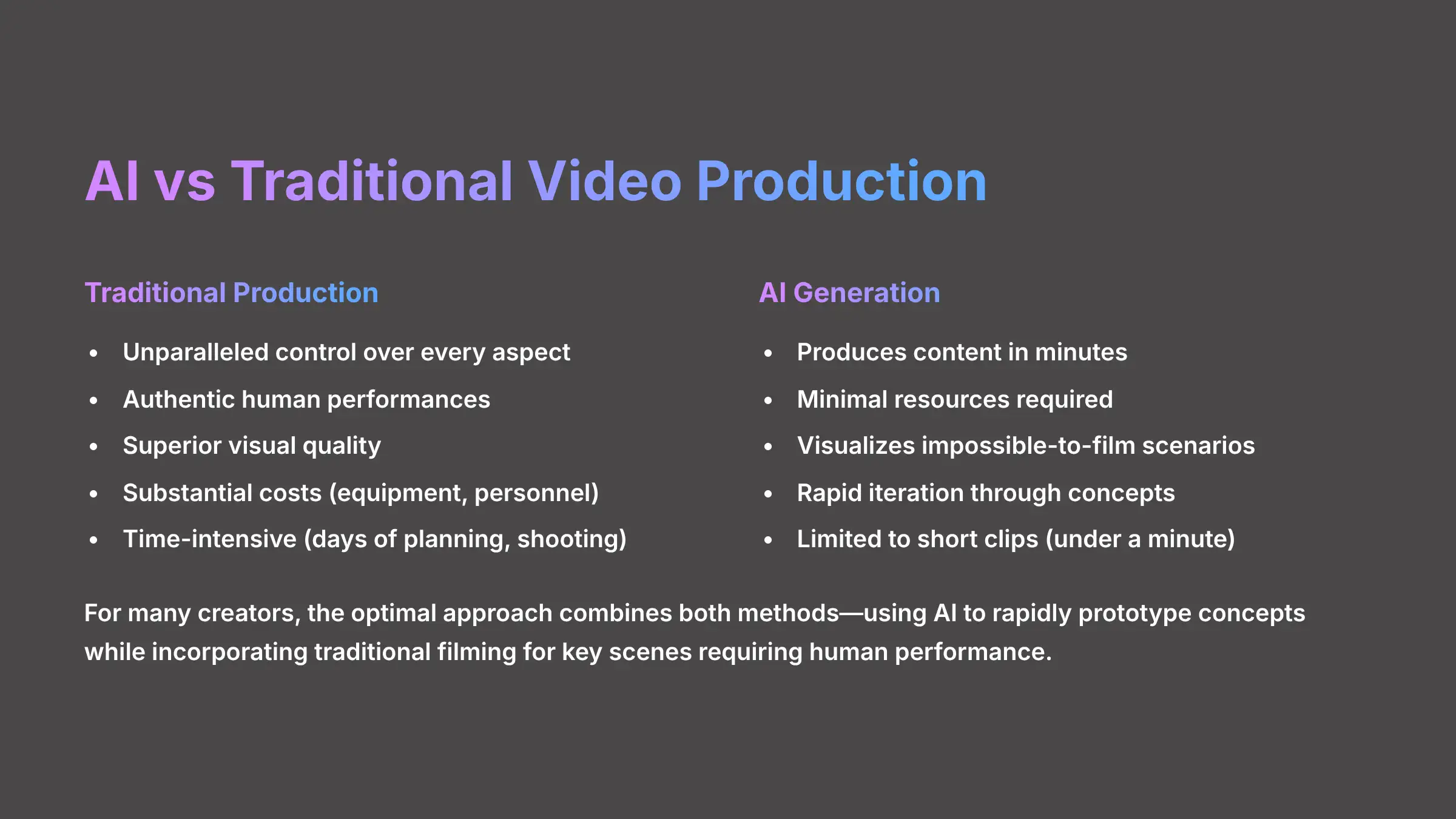

MagicLight FAQs: How do AI video generators compare to traditional video production?

AI video generation and traditional video production represent fundamentally different approaches to content creation, each with distinct advantages and limitations that creators should understand before choosing their production method.

Traditional video production involves physical cameras, real locations or sets, human actors, and a crew of specialists handling everything from lighting to sound. This process offers unparalleled control over every aspect of the final product and can achieve visual quality that AI hasn't yet matched consistently. The human element—authentic performances, director's vision, and collaborative creativity—remains a significant advantage. However, traditional production comes with substantial costs in equipment, personnel, locations, and time. Even simple productions typically require days of planning, shooting, and editing.

AI video generation, by contrast, can produce content in minutes with minimal resources. You need only a computer, internet connection, and subscription to an AI video platform. This dramatically reduces the barrier to entry for video creation. AI excels at quickly visualizing concepts, generating impossible-to-film scenarios (like fantasy environments), and iterating through multiple creative directions rapidly. The technology is particularly valuable for prototyping, conceptualization, and projects with limited budgets.

However, AI-generated videos still face significant limitations. Most platforms can only generate short clips (typically under a minute), struggle with complex narratives, and may produce inconsistencies in character appearance or physics. Fine details like realistic human faces, hands, and text remain challenging, and AI-generated content often has a distinctive “look” that trained eyes can identify.

For many creators, the optimal approach combines both methods—using AI to rapidly prototype concepts, generate difficult-to-film elements, or create background content, while incorporating traditional filming for key scenes requiring human performance or precise control. Our MagicLight Usecase guide demonstrates how AI can enhance traditional cinematography with advanced lighting effects.

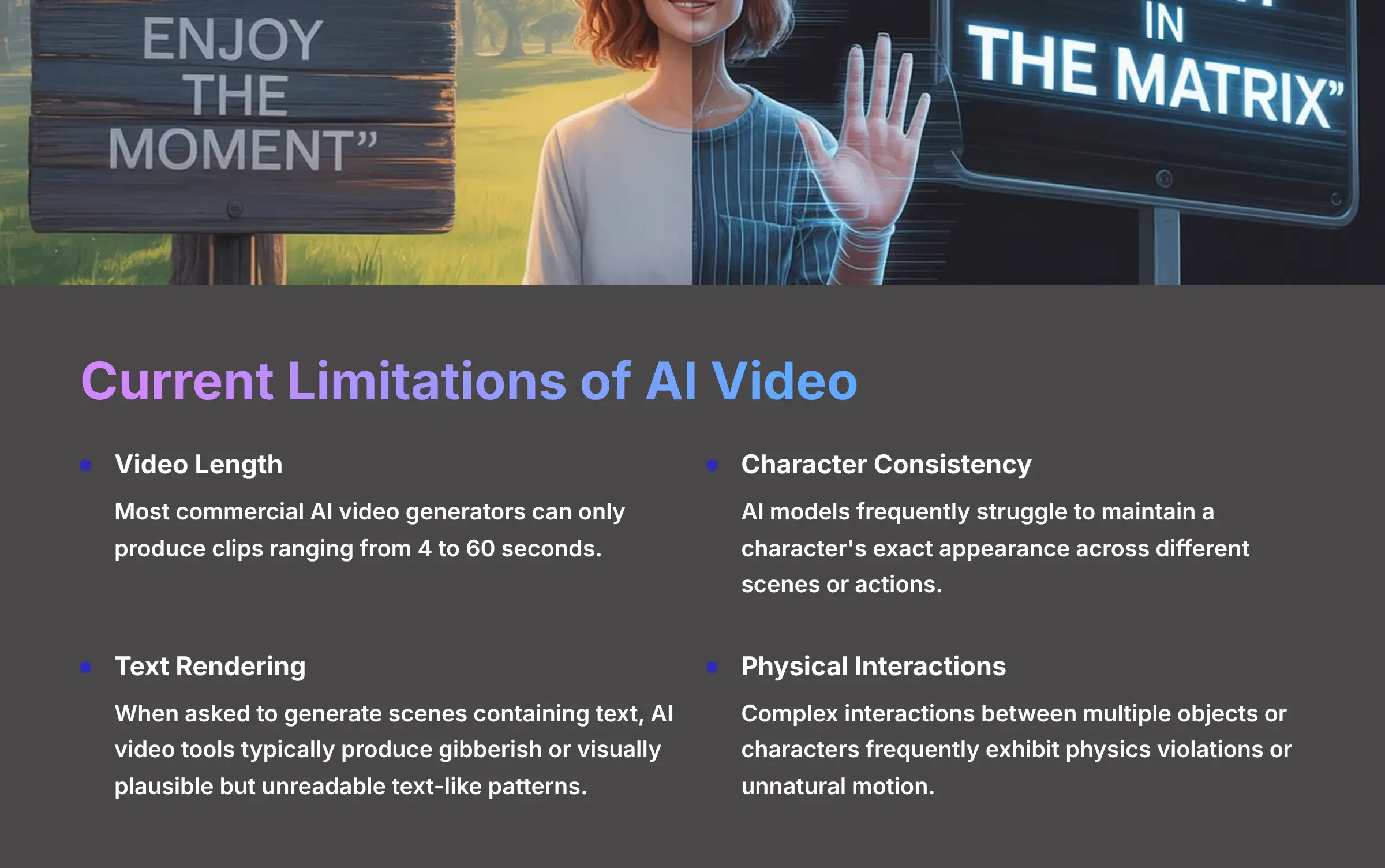

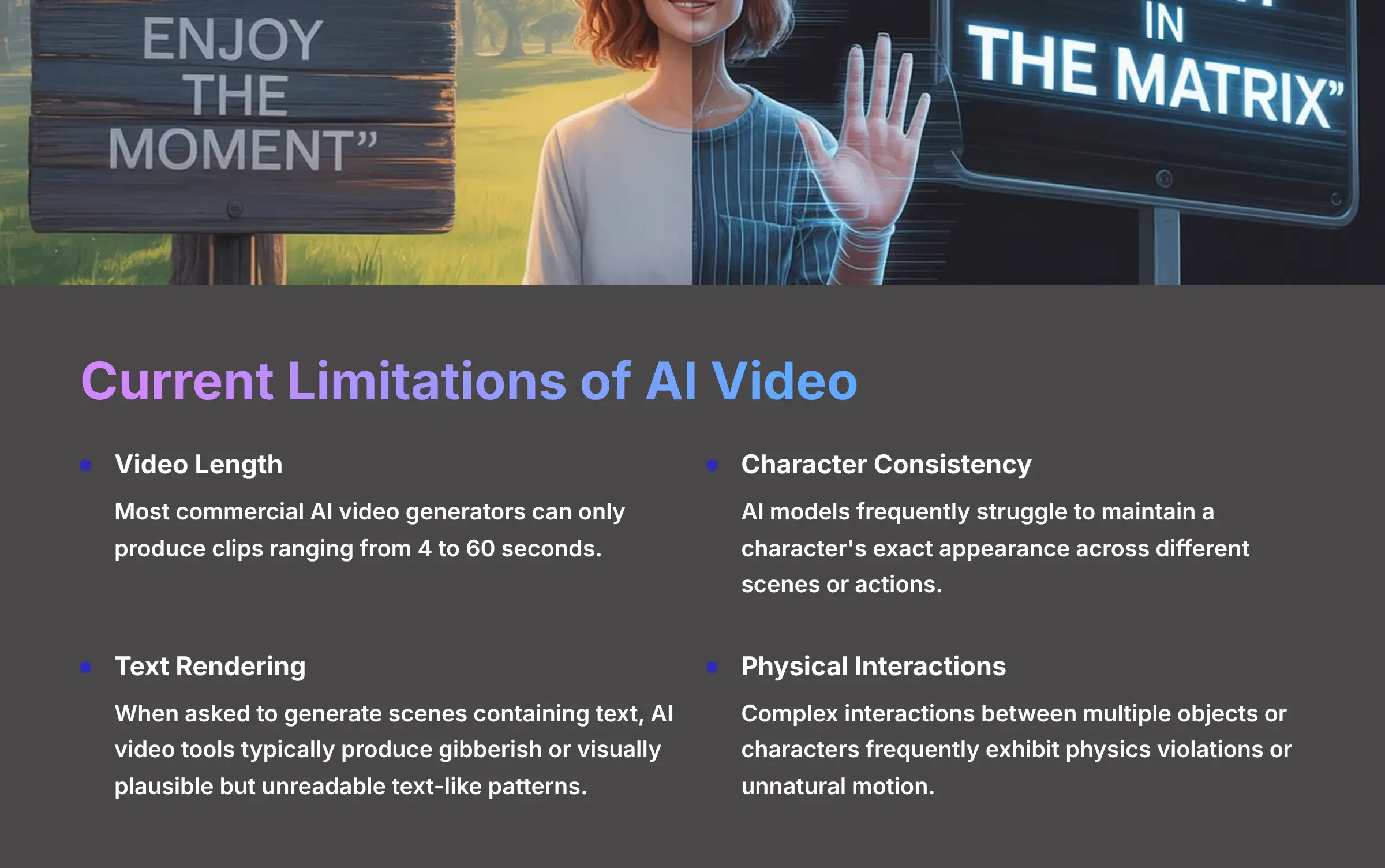

MagicLight FAQs: What are the current limitations of AI video generation?

Despite remarkable advances, AI video generation technology still faces several significant limitations that creators should be aware of when planning projects. Understanding these constraints helps set realistic expectations and develop effective workflows.

The most obvious limitation is video length. While marketing materials sometimes suggest otherwise, most commercial AI video generators can only produce clips ranging from 4 to 60 seconds. Creating longer content requires stitching together multiple generations, which often results in consistency issues between segments. This constraint stems from the computational complexity of maintaining temporal coherence over extended sequences.
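If you plan to stitch clips together, it helps to estimate how many generations a longer video will take. The sketch below is simple arithmetic, not a feature of any platform: the `clips_needed` function and the optional overlap (extra seconds shared between neighbouring clips so you can crossfade over the seam) are assumptions for illustration.

```python
import math


def clips_needed(target_seconds, clip_seconds, overlap_seconds=0):
    """Estimate how many clips are needed to cover `target_seconds`.

    Each clip after the first adds only (clip_seconds - overlap_seconds)
    of new footage, because the overlap is spent on the transition.
    """
    if clip_seconds <= overlap_seconds:
        raise ValueError("clip length must be longer than the overlap")
    if target_seconds <= clip_seconds:
        return 1
    new_footage_per_clip = clip_seconds - overlap_seconds
    extra = math.ceil((target_seconds - clip_seconds) / new_footage_per_clip)
    return 1 + extra


if __name__ == "__main__":
    # A 2-minute video from 10-second clips, with and without a 1-second crossfade.
    print(clips_needed(120, 10))
    print(clips_needed(120, 10, overlap_seconds=1))
```

Every seam between clips is a place where consistency can break, so the clip count is also a rough measure of how much cleanup to expect in editing.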

Character consistency presents another major challenge. AI models frequently struggle to maintain a character's exact appearance across different scenes or actions. Facial features, clothing details, and even body proportions may subtly shift throughout a generated clip. This “identity drift” becomes more pronounced in longer sequences or when characters perform complex movements.

Text rendering remains problematic for most systems. When asked to generate scenes containing text—such as signs, documents, or screens—AI video tools typically produce gibberish or visually plausible but unreadable text-like patterns. This limitation extends to numbers and other symbolic information.

Physical interactions often lack convincing realism. While simple movements can appear natural, complex interactions between multiple objects or characters frequently exhibit physics violations or unnatural motion. Particularly challenging areas include realistic hand movements, object manipulation, and fluid dynamics.

Resolution and detail limitations also persist. Most systems generate at 720p or 1080p resolution, with noticeable quality degradation when attempting higher resolutions. Fine details often lack consistency frame-to-frame, creating subtle flickering or shifting effects in textures and small elements.

Finally, commercial platforms impose strict content policies that prevent generation of various categories of content, including realistic depictions of public figures, explicit material, and potentially harmful imagery.

MagicLight FAQs: What types of videos work best with AI generation?

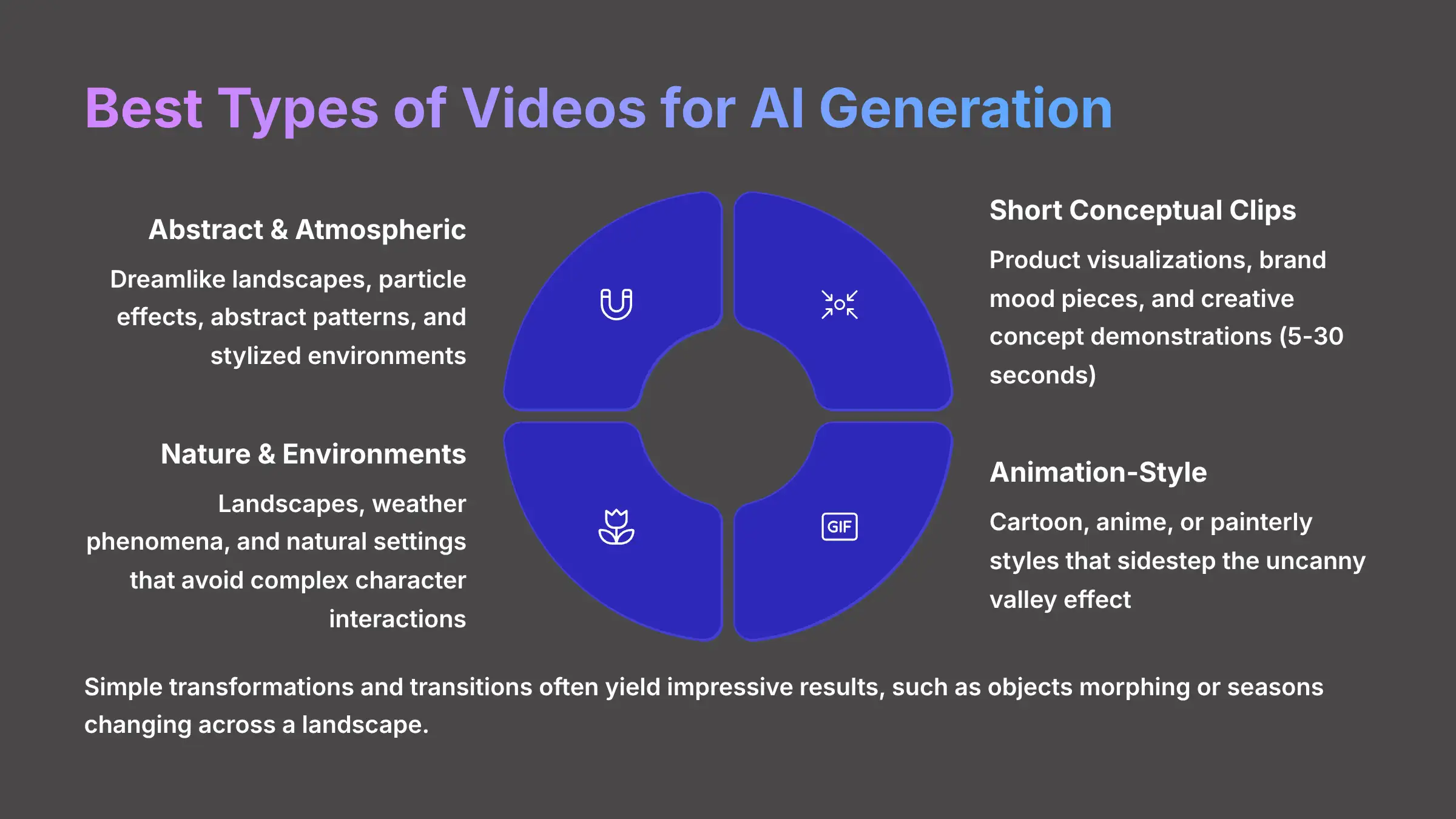

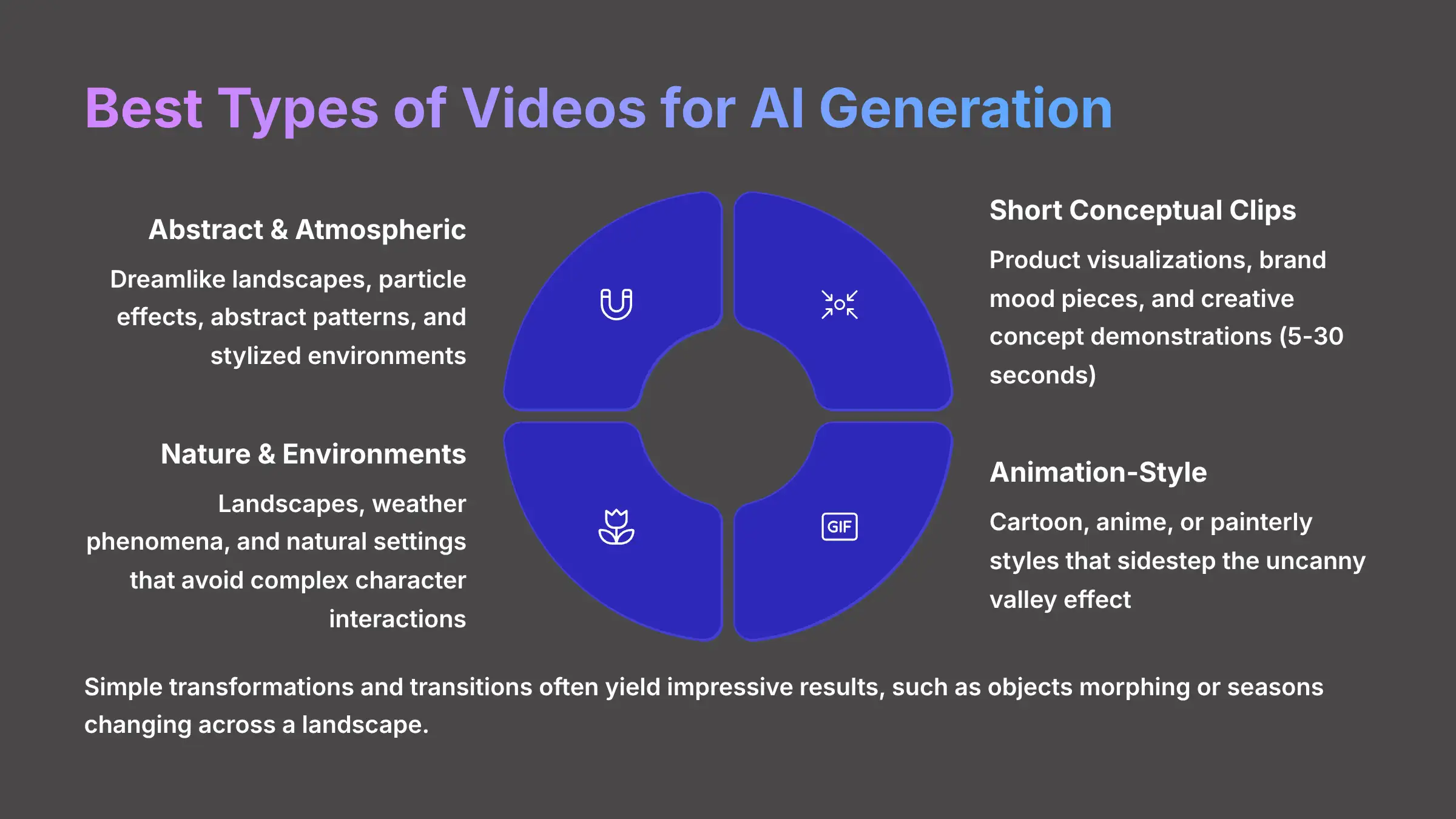

AI video generation excels at certain types of content while struggling with others, making it important to align your creative goals with the technology's current strengths. Understanding these optimal use cases helps maximize results and avoid frustration.

Abstract and atmospheric videos represent one of the strongest applications. AI systems excel at generating mood-driven visuals like dreamlike landscapes, particle effects, abstract patterns, and stylized environments. These applications don't require precise physical accuracy or character consistency, playing to the technology's creative strengths while minimizing its limitations.

Short conceptual clips also perform exceptionally well. Product visualizations, brand mood pieces, and creative concept demonstrations that require only 5-30 seconds of content can achieve impressive results. These brief formats avoid the consistency challenges that emerge in longer generations while still delivering high visual impact.

Animation-style content often outperforms attempts at photorealism. By embracing stylized aesthetics—like cartoon, anime, or painterly styles—creators can sidestep the uncanny valley effect that sometimes occurs with AI-generated human figures. These stylistic choices make minor inconsistencies less noticeable while creating visually distinctive content.

Nature and environment videos typically generate with remarkable quality. Landscapes, weather phenomena, and natural settings benefit from AI's training on vast amounts of such footage. These scenes generally avoid complex character interactions while showcasing the technology's ability to create beautiful, dynamic environments.

Simple transformations and transitions often yield impressive results. Videos showing one object morphing into another, day turning to night, or seasons changing across a landscape leverage the technology's interpolation capabilities effectively.

For creators seeking to tell longer narratives, the most effective approach combines AI-generated segments with traditional footage or uses AI primarily for background elements and special effects rather than as the sole production method. To see practical applications of these techniques, explore our MagicLight Tutorial on enhancing portraits with AI-generated lighting effects.

MagicLight FAQs: How can I achieve better consistency in AI-generated videos?

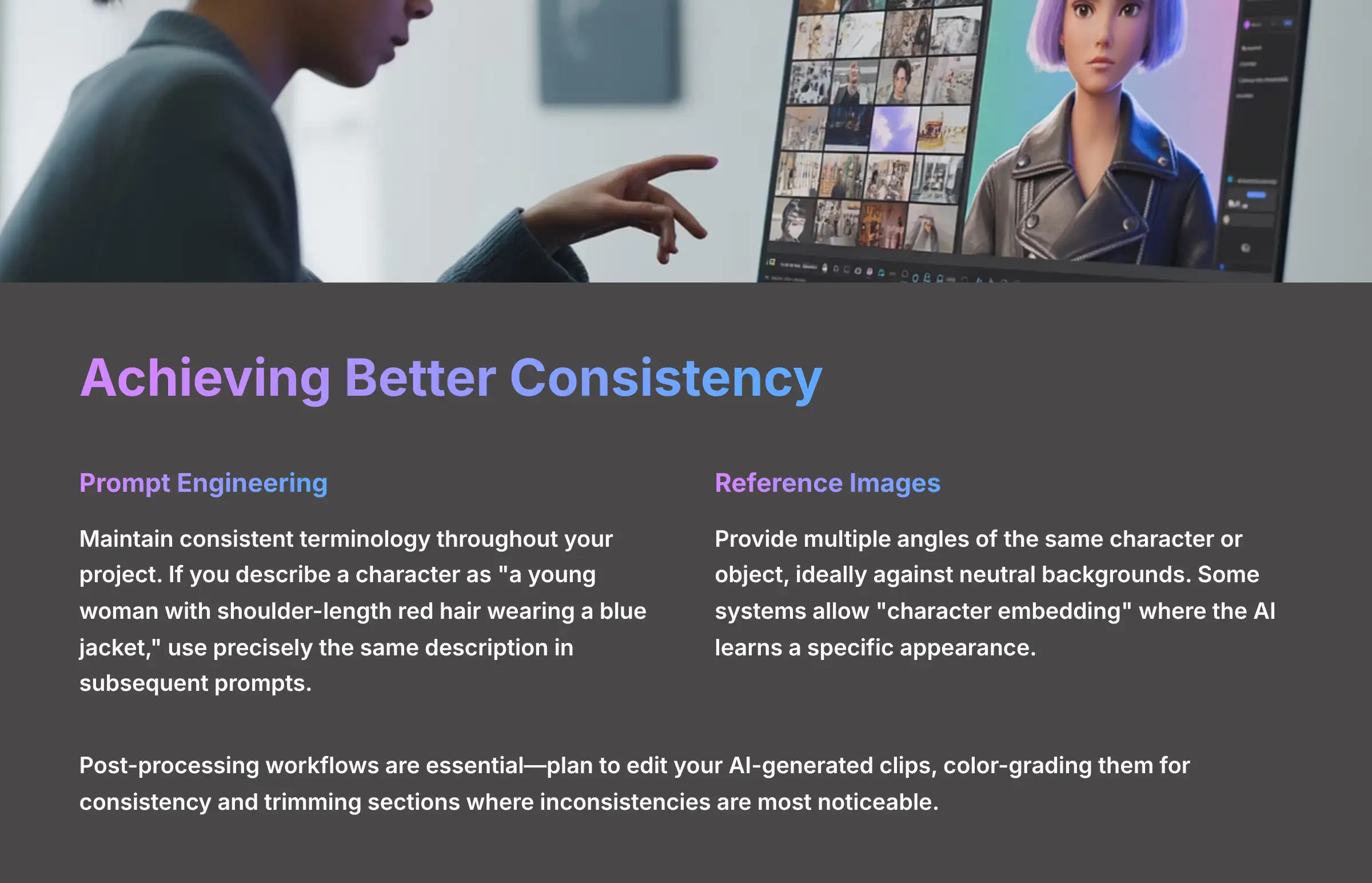

Achieving consistency in AI-generated videos remains one of the greatest challenges for creators, but several effective techniques can significantly improve results. Implementing these strategies will help you produce more cohesive and professional-looking content.

Prompt engineering is perhaps the most crucial skill to develop. When writing prompts, maintain consistent terminology throughout your project. If you describe a character as “a young woman with shoulder-length red hair wearing a blue jacket” in one prompt, use precisely the same description in subsequent prompts. Even minor variations in wording can result in visual differences. Additionally, include persistent style descriptors (like “cinematic lighting” or “shot on 35mm film”) in every prompt to maintain a consistent visual aesthetic.
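One low-tech way to keep wording identical across prompts is to store the character and style descriptions once and build every prompt from them. The sketch below shows the idea in Python. The `build_prompt` helper and the comma-separated layout are assumptions for illustration; no platform requires this exact format, so adapt it to whatever your tool responds to best.

```python
# Written once, reused verbatim in every prompt of the project.
CHARACTER = "a young woman with shoulder-length red hair wearing a blue jacket"
STYLE = ("cinematic lighting", "shot on 35mm film")


def build_prompt(action, character=CHARACTER, style=STYLE):
    """Combine the fixed character and style text with a per-shot action."""
    return ", ".join([character, action, *style])


if __name__ == "__main__":
    shots = [
        "walking through a rainy city street at night",
        "sitting in a cafe, looking out the window",
    ]
    for shot in shots:
        print(build_prompt(shot))
```

Only the action changes from shot to shot, so any visual drift you see comes from the model rather than from accidental rewording.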

Reference image techniques have proven highly effective for character consistency. Many platforms now allow you to upload reference images that the AI will use as guidance. For best results, provide multiple angles of the same character or object, ideally against neutral backgrounds. Some advanced systems even allow “character embedding” or “concept training” where the AI learns a specific appearance that can be referenced with a simple tag in future prompts.

Scene composition strategies can significantly improve continuity. When creating a sequence of clips meant to connect, include overlapping elements in your prompts. For example, if generating two consecutive scenes in a forest, specify identical background elements that will appear in both scenes. This creates visual anchors that help the viewer's brain perceive continuity even if minor details change.

Post-processing workflows are essential for professional results. Plan to edit your AI-generated clips, color-grading them for consistency and trimming sections where inconsistencies are most noticeable. Transitions between clips can strategically mask potential discontinuities, while careful sound design helps direct viewer attention away from minor visual inconsistencies.

Finally, consider a hybrid approach for critical projects. Use AI generation for backgrounds, establishing shots, or transition sequences, while filming key character moments or detailed interactions conventionally. This leverages each method's strengths while minimizing limitations.

MagicLight FAQs: What are the costs associated with AI video generation?

The cost structure for AI video generation varies significantly across platforms, with pricing models designed to accommodate different usage patterns and professional needs. Understanding these cost factors helps creators budget effectively and choose the right service for their projects.

Subscription-based models dominate the industry, typically offering tiered plans with different features and generation allowances. Entry-level plans generally range from $15-30 monthly, providing limited resolution options (usually 720p), shorter maximum clip lengths, and a set number of generations (often 50-200 per month). Professional tiers range from $50-150 monthly, offering higher resolutions (1080p or 4K), longer clip durations, priority processing, and increased generation limits. Enterprise plans with custom pricing provide the highest quality outputs, dedicated support, and specialized features.

Credit-based systems offer an alternative approach used by several platforms. These services charge per generation based on factors like resolution, length, and processing priority. Typical costs range from $0.50-5.00 per generation depending on these parameters, with bulk credit purchases offering discounted rates. This model benefits occasional users who don't need a full subscription.
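A quick way to choose between the two models is to work out the break-even point. The Python sketch below uses example prices picked from the ranges quoted in this guide, not any platform's actual rates, and the function names are made up for illustration. It also ignores plan allowances, so check that your expected volume fits within the plan's monthly limit.

```python
import math


def credit_cost(generations, price_per_generation):
    """Total monthly cost when paying per generation."""
    return generations * price_per_generation


def break_even_generations(subscription_fee, price_per_generation):
    """Smallest monthly volume at which credits cost at least as much
    as the flat subscription fee."""
    return math.ceil(subscription_fee / price_per_generation)


if __name__ == "__main__":
    fee = 30.0    # example entry-level subscription, dollars per month
    price = 1.50  # example pay-per-generation price, dollars
    print("break-even volume:", break_even_generations(fee, price))
    for n in (10, 20, 40):
        print(n, "generations on credits cost", credit_cost(n, price))
```

Below the break-even volume, credits are the cheaper route. Above it, the subscription wins, provided its allowance covers what you need.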

Resolution significantly impacts pricing across all platforms. Generating at 720p might cost half as much as 1080p, while 4K commands premium pricing when available. Similarly, clip duration directly affects cost—a 30-second clip typically costs 3-5 times more than a 10-second clip due to the increased computational resources required.

Additional features often carry supplemental costs. Character consistency tools, style preservation options, advanced editing capabilities, and commercial usage rights frequently require higher-tier subscriptions or additional payments. Some platforms also charge extra for removing watermarks or accessing premium visual styles.

When budgeting for AI video projects, consider not just the direct generation costs but also potential post-processing expenses. Most professional workflows still require traditional editing, sound design, and color correction to achieve broadcast-quality results, adding to the total project cost.

MagicLight FAQs: What are the legal and ethical considerations for AI-generated videos?

The legal and ethical landscape surrounding AI-generated videos presents complex considerations that creators must navigate carefully. Understanding these issues is essential for responsible creation and to avoid potential legal complications.

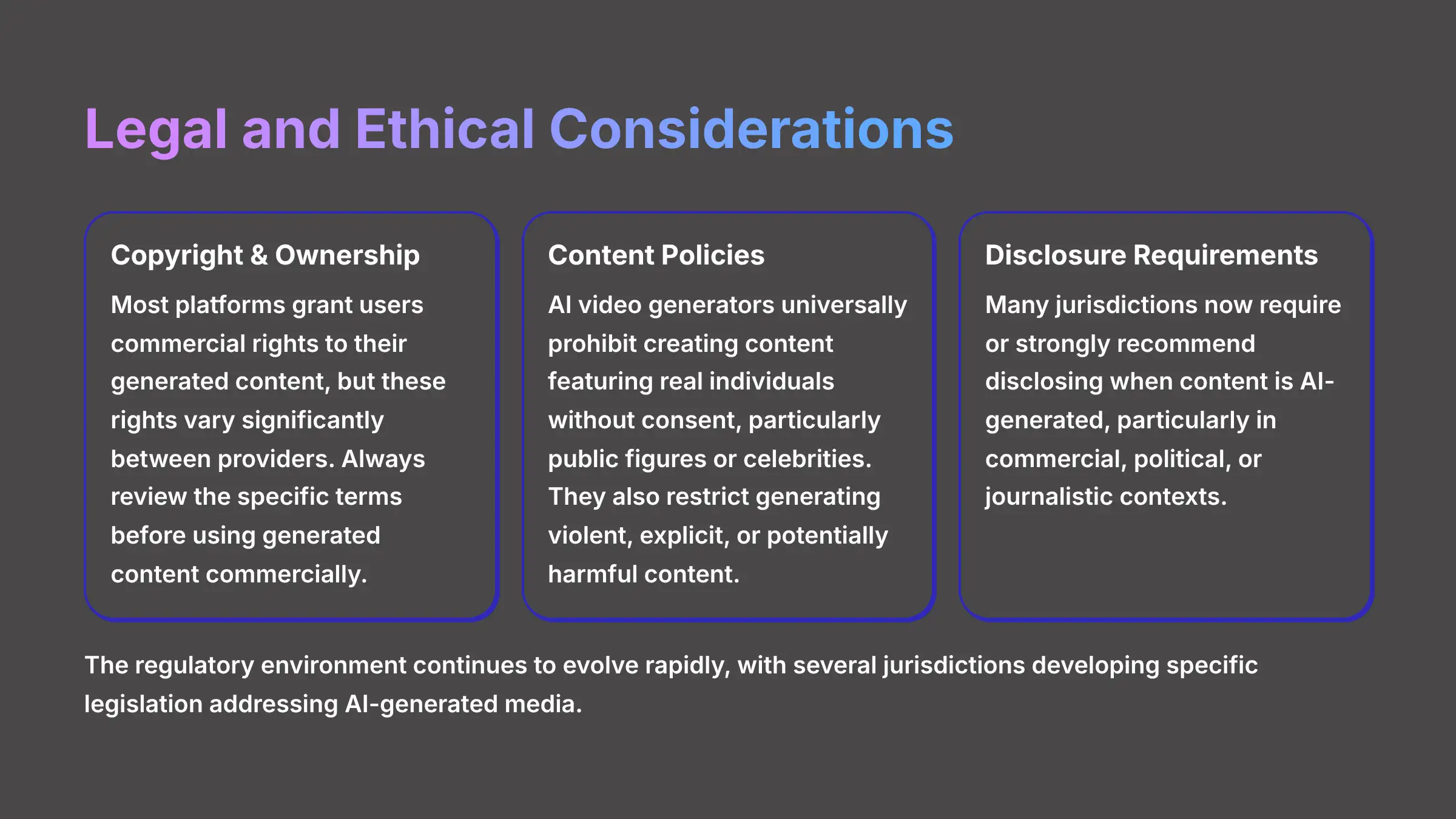

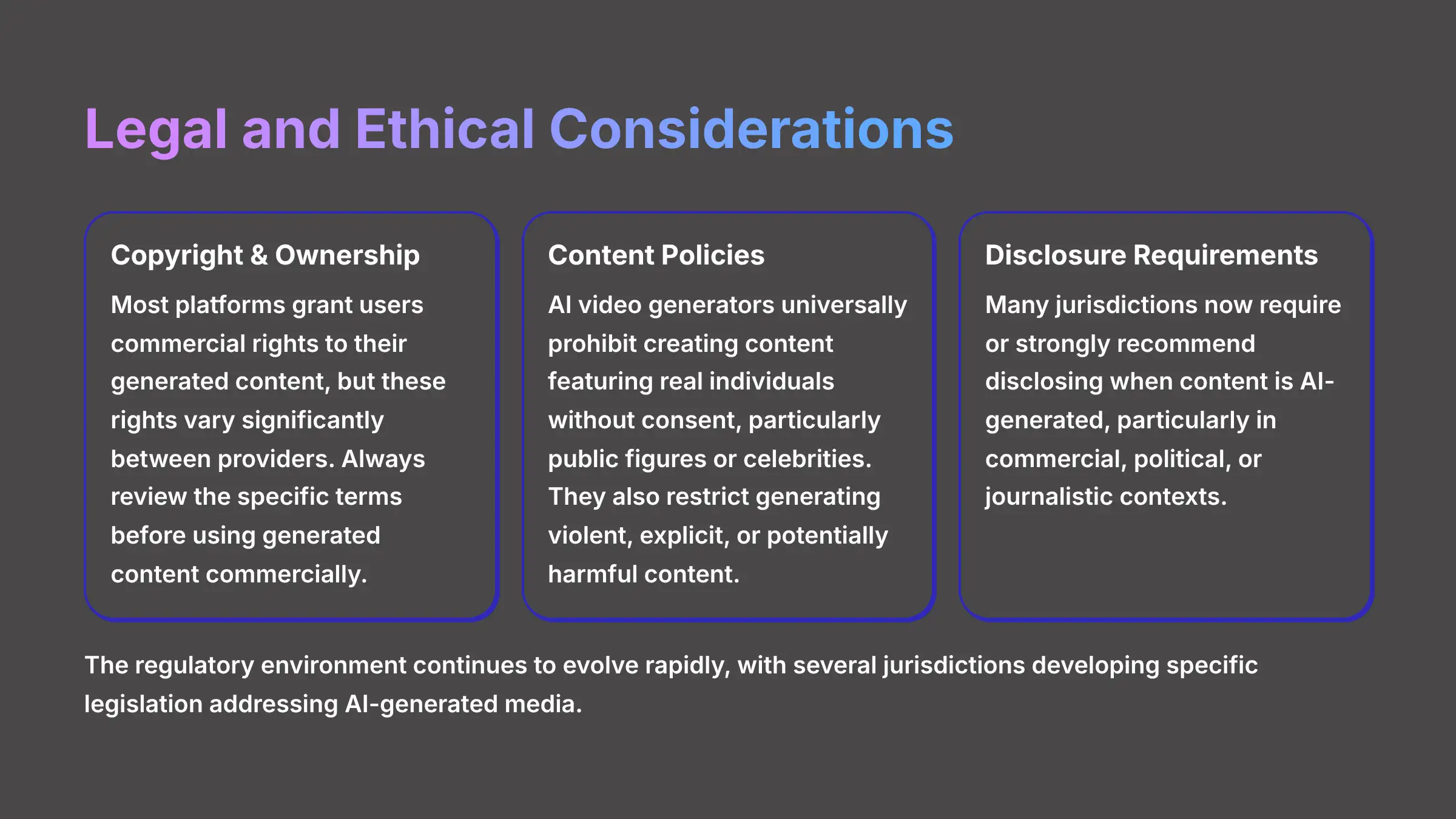

Copyright and ownership questions remain at the forefront of legal concerns. Most AI video platforms grant users commercial rights to their generated content through their terms of service, but these rights vary significantly between providers. Some offer full ownership, while others provide limited licenses that restrict certain uses. Always review the specific terms of your chosen platform before using generated content commercially. Additionally, the training data used by these AI systems has raised questions about derivative works and potential copyright infringement, an area where case law is still developing.

Content policies impose important restrictions on most platforms. AI video generators generally prohibit creating content featuring real individuals without consent, particularly public figures or celebrities. They also restrict generating violent, explicit, or potentially harmful content. These policies are enforced through both technical filters and terms of service agreements, with violations potentially resulting in account termination.

Disclosure requirements are increasingly becoming standard practice. Many jurisdictions now require or strongly recommend disclosing when content is AI-generated, particularly in commercial, political, or journalistic contexts. This transparency helps maintain audience trust and prevents potential deception. Several industry groups have developed standardized disclosure frameworks that creators can adopt.

Ethical considerations extend beyond legal requirements. Responsible creators consider the potential societal impact of their AI-generated content, particularly regarding representation, stereotyping, and the potential for misinformation. The technology's ability to create convincing but fictional scenarios carries special responsibility.

Looking forward, the regulatory environment continues to evolve rapidly. Several jurisdictions are developing specific legislation addressing AI-generated media, with particular focus on disclosure requirements, copyright implications, and preventing harmful applications. Staying informed about these developments is crucial for creators working with this technology.

MagicLight FAQs: How can beginners get started with AI video generation?

Getting started with AI video generation is more accessible than ever, even for complete beginners with no technical background. Following a structured approach will help you quickly develop skills and create impressive results.

Begin by selecting the right platform for your needs. For absolute beginners, Pika Labs offers one of the most user-friendly interfaces with excellent results for simple prompts. Runway has a slightly steeper learning curve but offers more creative control and professional features. Most platforms offer free trials or limited free tiers that allow you to experiment before committing financially. Sign up for 2-3 different services to compare their interfaces and results firsthand.

Understanding prompt fundamentals will dramatically improve your initial results. Start with simple, clear descriptions rather than complex instructions. Include specific details about the subject, setting, lighting, camera angle, and motion. For example, instead of “a beautiful forest,” try “a sunlit redwood forest with morning mist, slow panning shot, cinematic lighting, 35mm film look.” This level of specificity guides the AI toward your creative vision.

Begin with short, simple projects to build confidence. Single-scene concepts with minimal movement work best for beginners. As you gain experience, gradually increase complexity by adding camera movements, multiple subjects, or more detailed environments. Document successful prompts in a personal library for future reference.
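A personal prompt library can be as simple as a JSON file on your computer. The Python sketch below is one possible way to keep it. The file name, the `save_prompt` and `load_prompts` helpers, and the fields stored are all assumptions for illustration, and a spreadsheet or notes app works just as well.

```python
import json
from pathlib import Path


def load_prompts(library_path):
    """Return the saved prompts as a dict, or an empty dict if no file exists."""
    path = Path(library_path)
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def save_prompt(library_path, name, prompt, notes=""):
    """Add or update one named prompt and write the library back to disk."""
    library = load_prompts(library_path)
    library[name] = {"prompt": prompt, "notes": notes}
    Path(library_path).write_text(json.dumps(library, indent=2), encoding="utf-8")
    return library


if __name__ == "__main__":
    save_prompt(
        "prompt_library.json",  # hypothetical file name
        "misty-redwoods",
        "a sunlit redwood forest with morning mist, slow panning shot, "
        "cinematic lighting, 35mm film look",
        notes="Worked well on the first try",
    )
    print(list(load_prompts("prompt_library.json")))
```

Saving a short note alongside each prompt makes it easier to remember why it worked when you come back to it later.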

Join online communities dedicated to AI video creation for invaluable learning resources. Discord servers for specific AI tools, Reddit communities like r/AIVideoGeneration, and specialized forums offer prompt libraries, tutorials, and feedback from experienced users. These communities frequently share techniques for overcoming common challenges.

Develop a basic understanding of post-processing workflows. Even simple editing in accessible tools like CapCut or DaVinci Resolve can significantly enhance AI-generated clips. Learning to trim clips, adjust colors, and add sound dramatically improves final quality.

Finally, embrace experimentation. AI video generation often produces unexpected results that can inspire new creative directions. Set aside time specifically for creative exploration rather than focusing exclusively on achieving precise predetermined outcomes.

For comprehensive step-by-step guidance, our detailed MagicLight Tutorial provides practical instructions for creating professional-quality videos with AI assistance.

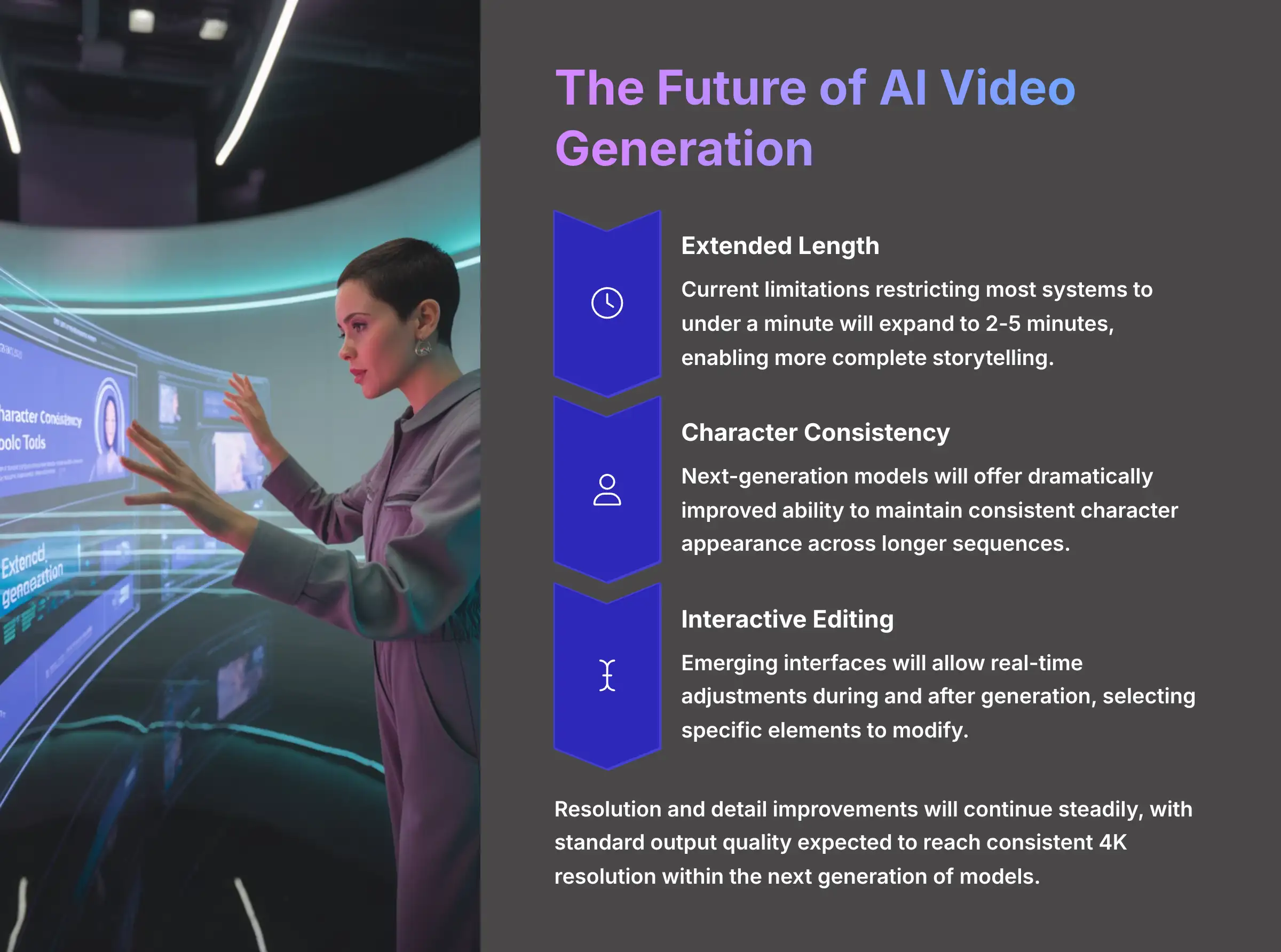

MagicLight FAQs: How will AI video generation evolve in the near future?

AI video generation is undergoing rapid evolution, with several key developments expected to reshape the technology's capabilities within the next 12-24 months. Understanding these trends helps creators prepare for emerging opportunities and stay ahead of the curve.

Extended generation length represents one of the most anticipated advancements. Current limitations restricting most systems to under a minute will likely expand significantly, with several major platforms already testing 2-5 minute generation capabilities in closed beta environments. This extension will enable more complete storytelling and reduce the need for complex stitching of multiple clips.

Character consistency is receiving intense development focus. Next-generation models will offer dramatically improved ability to maintain consistent character appearance across longer sequences and complex actions. Advanced character embedding systems will allow creators to “teach” the AI specific individuals, objects, or environments that can be reliably reproduced across multiple generations.

Interactive editing capabilities will transform the workflow. Rather than the current generate-and-hope approach, emerging interfaces will allow real-time adjustments during and after generation. Users will be able to select specific elements within a scene to modify, redirect motion mid-generation, or adjust stylistic elements without starting over.

Multi-modal input processing will expand creative possibilities. Future systems will simultaneously process combinations of text prompts, reference images, audio cues, and even rough sketches or storyboards to generate more precisely controlled outputs. This will give creators much finer control over the final result while maintaining the efficiency of AI generation.

Resolution and detail improvements will continue steadily. The standard output quality is expected to reach consistent 4K resolution within the next generation of models, with significant improvements in fine detail rendering, particularly for challenging elements like text, faces, and hands.

Integration with traditional production workflows will accelerate. Expect deeper compatibility with industry-standard editing software, specialized plugins for major creative suites, and purpose-built tools that seamlessly blend AI generation with conventional footage and effects.

These advancements will progressively address current limitations while opening entirely new creative possibilities, further establishing AI video generation as an essential tool in the modern creator's toolkit. For insights into how these developments might affect specific platforms, read our comprehensive MagicLight Review analyzing long-form video capabilities.

Ready to dive deeper into AI video generation? Explore our comprehensive collection of guides, comparisons, and tutorials at AI Video Generators Free. Whether you're looking for detailed platform reviews, step-by-step tutorials, or the latest industry insights, we've got you covered with expert analysis and practical guidance.

For specific questions about MagicLight and other AI video tools, check out our extensive MagicLight FAQs section where we answer the most common questions from creators just like you.
